Documentation


Overview

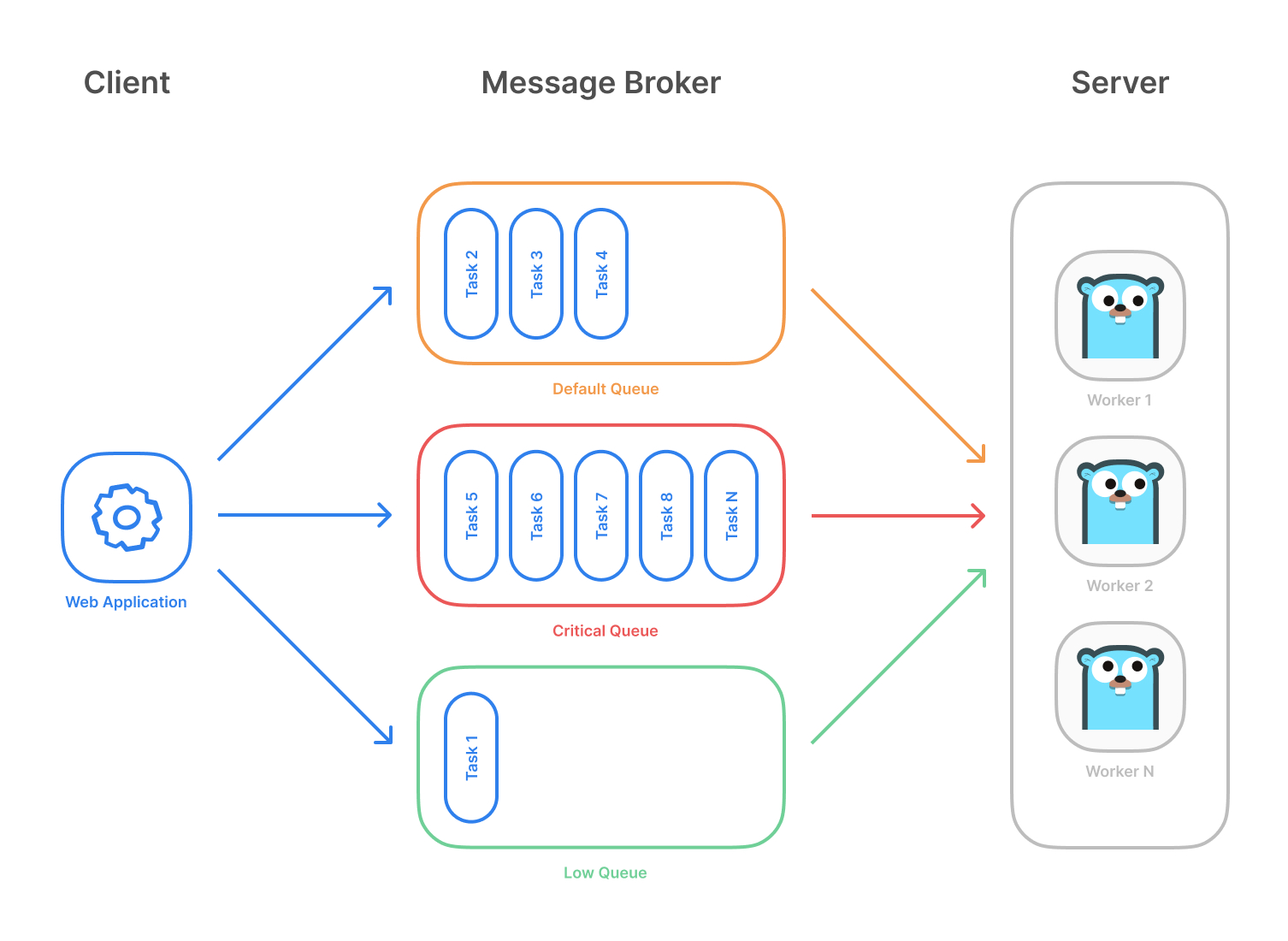

Package asynq provides a framework for a Redis-based distributed task queue.

Asynq uses Redis as a message broker. To connect to Redis, specify the connection using one of the RedisConnOpt types.

redisConnOpt := asynq.RedisClientOpt{
	Addr:     "127.0.0.1:6379",
	Password: "xxxxx",
	DB:       2,
}

The Client is used to enqueue a task.

client := asynq.NewClient(redisConnOpt)
defer client.Close()

// A task is created with two parameters: its type and payload.
// The payload is simply a byte slice; it can be encoded as JSON, Protocol Buffers, Gob, etc.
b, err := json.Marshal(ExamplePayload{UserID: 42})
if err != nil {
	log.Fatal(err)
}
task := asynq.NewTask("example", b)

// Enqueue the task to be processed immediately.
info, err := client.Enqueue(task)
if err != nil {
	log.Fatal(err)
}
log.Printf("enqueued task: id=%s queue=%s", info.ID, info.Queue)

// Schedule the task to be processed after one minute.
info, err = client.Enqueue(task, asynq.ProcessIn(1*time.Minute))
if err != nil {
	log.Fatal(err)
}

The Server is used to run the task processing workers with a given handler.

srv := asynq.NewServer(redisConnOpt, asynq.Config{
	Concurrency: 10,
})
if err := srv.Run(handler); err != nil {
	log.Fatal(err)
}

Handler is an interface type with a method that takes a task and returns an error. A Handler should return nil if processing is successful, and a non-nil error otherwise. If the handler panics or returns a non-nil error, the task will be retried later.

Example of a type that implements the Handler interface.

type TaskHandler struct {
	// ...
}

func (h *TaskHandler) ProcessTask(ctx context.Context, task *asynq.Task) error {
	switch task.Type() {
	case "example":
		var data ExamplePayload
		if err := json.Unmarshal(task.Payload(), &data); err != nil {
			return err
		}
		// perform task with the data
	default:
		return fmt.Errorf("unexpected task type %q", task.Type())
	}
	return nil
}

Index

- Variables

- func DefaultRetryDelayFunc(n int, e error, t *Task) time.Duration

- func GetMaxRetry(ctx context.Context) (n int, ok bool)

- func GetQueueName(ctx context.Context) (queue string, ok bool)

- func GetRetryCount(ctx context.Context) (n int, ok bool)

- func GetTaskID(ctx context.Context) (id string, ok bool)

- func NotFound(ctx context.Context, task *Task) error

- type Client

- type ClusterNode

- type Config

- type DailyStats

- type ErrorHandler

- type ErrorHandlerFunc

- type GroupAggregator

- type GroupAggregatorFunc

- type GroupInfo

- type Handler

- type HandlerFunc

- type Inspector

- func (i *Inspector) ArchiveAllAggregatingTasks(queue, group string) (int, error)

- func (i *Inspector) ArchiveAllPendingTasks(queue string) (int, error)

- func (i *Inspector) ArchiveAllRetryTasks(queue string) (int, error)

- func (i *Inspector) ArchiveAllScheduledTasks(queue string) (int, error)

- func (i *Inspector) ArchiveTask(queue, id string) error

- func (i *Inspector) CancelProcessing(id string) error

- func (i *Inspector) Close() error

- func (i *Inspector) ClusterKeySlot(queue string) (int64, error)

- func (i *Inspector) ClusterNodes(queue string) ([]*ClusterNode, error)

- func (i *Inspector) DeleteAllAggregatingTasks(queue, group string) (int, error)

- func (i *Inspector) DeleteAllArchivedTasks(queue string) (int, error)

- func (i *Inspector) DeleteAllCompletedTasks(queue string) (int, error)

- func (i *Inspector) DeleteAllPendingTasks(queue string) (int, error)

- func (i *Inspector) DeleteAllRetryTasks(queue string) (int, error)

- func (i *Inspector) DeleteAllScheduledTasks(queue string) (int, error)

- func (i *Inspector) DeleteQueue(queue string, force bool) error

- func (i *Inspector) DeleteTask(queue, id string) error

- func (i *Inspector) GetQueueInfo(queue string) (*QueueInfo, error)

- func (i *Inspector) GetTaskInfo(queue, id string) (*TaskInfo, error)

- func (i *Inspector) Groups(queue string) ([]*GroupInfo, error)

- func (i *Inspector) History(queue string, n int) ([]*DailyStats, error)

- func (i *Inspector) ListActiveTasks(queue string, opts ...ListOption) ([]*TaskInfo, error)

- func (i *Inspector) ListAggregatingTasks(queue, group string, opts ...ListOption) ([]*TaskInfo, error)

- func (i *Inspector) ListArchivedTasks(queue string, opts ...ListOption) ([]*TaskInfo, error)

- func (i *Inspector) ListCompletedTasks(queue string, opts ...ListOption) ([]*TaskInfo, error)

- func (i *Inspector) ListPendingTasks(queue string, opts ...ListOption) ([]*TaskInfo, error)

- func (i *Inspector) ListRetryTasks(queue string, opts ...ListOption) ([]*TaskInfo, error)

- func (i *Inspector) ListScheduledTasks(queue string, opts ...ListOption) ([]*TaskInfo, error)

- func (i *Inspector) ListSchedulerEnqueueEvents(entryID string, opts ...ListOption) ([]*SchedulerEnqueueEvent, error)

- func (i *Inspector) PauseQueue(queue string) error

- func (i *Inspector) Queues() ([]string, error)

- func (i *Inspector) RunAllAggregatingTasks(queue, group string) (int, error)

- func (i *Inspector) RunAllArchivedTasks(queue string) (int, error)

- func (i *Inspector) RunAllRetryTasks(queue string) (int, error)

- func (i *Inspector) RunAllScheduledTasks(queue string) (int, error)

- func (i *Inspector) RunTask(queue, id string) error

- func (i *Inspector) SchedulerEntries() ([]*SchedulerEntry, error)

- func (i *Inspector) Servers() ([]*ServerInfo, error)

- func (i *Inspector) UnpauseQueue(queue string) error

- type ListOption

- type LogLevel

- type Logger

- type MiddlewareFunc

- type Option

- func Deadline(t time.Time) Option

- func Group(name string) Option

- func MaxRetry(n int) Option

- func ProcessAt(t time.Time) Option

- func ProcessIn(d time.Duration) Option

- func Queue(name string) Option

- func Retention(d time.Duration) Option

- func TaskID(id string) Option

- func Timeout(d time.Duration) Option

- func Unique(ttl time.Duration) Option

- type OptionType

- type PeriodicTaskConfig

- type PeriodicTaskConfigProvider

- type PeriodicTaskManager

- type PeriodicTaskManagerOpts

- type QueueInfo

- type RedisClientOpt

- type RedisClusterClientOpt

- type RedisConnOpt

- type RedisFailoverClientOpt

- type ResultWriter

- type RetryDelayFunc

- type Scheduler

- type SchedulerEnqueueEvent

- type SchedulerEntry

- type SchedulerOpts

- type ServeMux

- func (mux *ServeMux) Handle(pattern string, handler Handler)

- func (mux *ServeMux) HandleFunc(pattern string, handler func(context.Context, *Task) error)

- func (mux *ServeMux) Handler(t *Task) (h Handler, pattern string)

- func (mux *ServeMux) ProcessTask(ctx context.Context, task *Task) error

- func (mux *ServeMux) Use(mws ...MiddlewareFunc)

- type Server

- type ServerInfo

- type Task

- type TaskInfo

- type TaskState

- type WorkerInfo


Constants

This section is empty.

Variables

var (
	// ErrQueueNotFound indicates that the specified queue does not exist.
	ErrQueueNotFound = errors.New("queue not found")

	// ErrQueueNotEmpty indicates that the specified queue is not empty.
	ErrQueueNotEmpty = errors.New("queue is not empty")

	// ErrTaskNotFound indicates that the specified task cannot be found in the queue.
	ErrTaskNotFound = errors.New("task not found")
)

var ErrDuplicateTask = errors.New("task already exists")

ErrDuplicateTask indicates that the given task could not be enqueued since it's a duplicate of another task.

ErrDuplicateTask error only applies to tasks enqueued with a Unique option.

var ErrLeaseExpired = errors.New("asynq: task lease expired")

ErrLeaseExpired indicates that the task failed because the worker processing it could not extend its lease due to missing heartbeats. The worker may have crashed or been cut off from the network.

var ErrServerClosed = errors.New("asynq: Server closed")

ErrServerClosed indicates that the operation is now illegal because the server has been shut down.

var ErrTaskIDConflict = errors.New("task ID conflicts with another task")

ErrTaskIDConflict indicates that the given task could not be enqueued since its task ID already exists.

ErrTaskIDConflict error only applies to tasks enqueued with a TaskID option.

var SkipRetry = errors.New("skip retry for the task")

SkipRetry is used as a return value from Handler.ProcessTask to indicate that the task should not be retried and should be archived instead.

Functions

func DefaultRetryDelayFunc (added in v0.14.0)

DefaultRetryDelayFunc is the default RetryDelayFunc used if one is not specified in Config. It uses an exponential back-off strategy to calculate the retry delay.

func GetMaxRetry (added in v0.9.1)

GetMaxRetry extracts the maximum retry count from a context, if any.

Return value n indicates the maximum number of times the associated task can be retried if ProcessTask returns a non-nil error.

func GetQueueName (added in v0.12.0)

GetQueueName extracts the queue name from a context, if any.

Return value queue indicates which queue the task was pulled from.

func GetRetryCount (added in v0.9.1)

GetRetryCount extracts the retry count from a context, if any.

Return value n indicates the number of times the associated task has been retried so far.

Types

type Client

type Client struct {
	// contains filtered or unexported fields
}

A Client is responsible for scheduling tasks.

A Client is used to register tasks that should be processed immediately or some time in the future.

Clients are safe for concurrent use by multiple goroutines.

func NewClient

func NewClient(r RedisConnOpt) *Client

NewClient returns a new Client instance given a Redis connection option.

func (*Client) Enqueue (added in v0.5.0)

Enqueue enqueues the given task to a queue.

Enqueue returns TaskInfo and nil error if the task is enqueued successfully, otherwise returns a non-nil error.

The argument opts specifies the behavior of task processing. If there are conflicting Option values the last one overrides others. Any options provided to NewTask can be overridden by options passed to Enqueue. By default, max retry is set to 25 and timeout is set to 30 minutes.

If no ProcessAt or ProcessIn option is provided, the task is put in the pending state immediately.

Enqueue uses context.Background internally; to specify the context, use EnqueueContext.

func (*Client) EnqueueContext (added in v0.19.1)

EnqueueContext enqueues the given task to a queue.

EnqueueContext returns TaskInfo and nil error if the task is enqueued successfully, otherwise returns a non-nil error.

The argument opts specifies the behavior of task processing. If there are conflicting Option values the last one overrides others. Any options provided to NewTask can be overridden by options passed to EnqueueContext. By default, max retry is set to 25 and timeout is set to 30 minutes.

If no ProcessAt or ProcessIn option is provided, the task is put in the pending state immediately.

The ctx argument applies only to the enqueue operation itself. To specify a task timeout or deadline, use the Timeout and Deadline options instead.

type ClusterNode (added in v0.12.0)

type ClusterNode struct {
	// Node ID in the cluster.
	ID string

	// Address of the node.
	Addr string
}

ClusterNode describes a node in a Redis cluster.

type Config

type Config struct {
	// Maximum number of tasks processed concurrently.
	//
	// If set to a zero or negative value, NewServer will overwrite the value
	// to the number of CPUs usable by the current process.
	Concurrency int

	// BaseContext optionally specifies a function that returns the base context for Handler invocations on this server.
	//
	// If BaseContext is nil, the default is context.Background().
	// If this is defined, then it MUST return a non-nil context.
	BaseContext func() context.Context

	// Function to calculate retry delay for a failed task.
	//
	// By default, it uses an exponential backoff algorithm to calculate the delay.
	RetryDelayFunc RetryDelayFunc

	// Predicate function to determine whether the error returned from Handler is a failure.
	// If the function returns false, Server will not increment the retried counter for the task,
	// and Server won't record the queue stats (processed and failed stats) to avoid skewing the error
	// rate of the queue.
	//
	// By default, if the given error is non-nil the function returns true.
	IsFailure func(error) bool

	// List of queues to process with given priority value. Keys are the names of the
	// queues and values are the associated priority values.
	//
	// If set to nil or not specified, the server will process only the "default" queue.
	//
	// Priority is treated as follows to avoid starving low priority queues.
	//
	// Example:
	//
	//	Queues: map[string]int{
	//		"critical": 6,
	//		"default":  3,
	//		"low":      1,
	//	}
	//
	// With the above config and given that none of the queues are empty, the tasks
	// in "critical", "default", "low" should be processed 60%, 30%, 10% of
	// the time respectively.
	//
	// If a queue has a zero or negative priority value, the queue will be ignored.
	Queues map[string]int

	// StrictPriority indicates whether the queue priority should be treated strictly.
	//
	// If set to true, tasks in the queue with the highest priority are processed first.
	// The tasks in lower priority queues are processed only when those queues with
	// higher priorities are empty.
	StrictPriority bool

	// ErrorHandler handles errors returned by the task handler.
	//
	// HandleError is invoked only if the task handler returns a non-nil error.
	//
	// Example:
	//
	//	func reportError(ctx context.Context, task *asynq.Task, err error) {
	//		retried, _ := asynq.GetRetryCount(ctx)
	//		maxRetry, _ := asynq.GetMaxRetry(ctx)
	//		if retried >= maxRetry {
	//			err = fmt.Errorf("retry exhausted for task %s: %w", task.Type(), err)
	//		}
	//		errorReportingService.Notify(err)
	//	}
	//
	//	ErrorHandler: asynq.ErrorHandlerFunc(reportError)
	ErrorHandler ErrorHandler

	// Logger specifies the logger used by the server instance.
	//
	// If unset, the default logger is used.
	Logger Logger

	// LogLevel specifies the minimum log level to enable.
	//
	// If unset, InfoLevel is used by default.
	LogLevel LogLevel

	// ShutdownTimeout specifies the duration to wait to let workers finish their tasks
	// before forcing them to abort when stopping the server.
	//
	// If unset or zero, a default timeout of 8 seconds is used.
	ShutdownTimeout time.Duration

	// HealthCheckFunc is called periodically with any errors encountered during ping to the
	// connected Redis server.
	HealthCheckFunc func(error)

	// HealthCheckInterval specifies the interval between health checks.
	//
	// If unset or zero, the interval is set to 15 seconds.
	HealthCheckInterval time.Duration

	// DelayedTaskCheckInterval specifies the interval between checks run on 'scheduled' and 'retry'
	// tasks, and forwarding them to 'pending' state if they are ready to be processed.
	//
	// If unset or zero, the interval is set to 5 seconds.
	DelayedTaskCheckInterval time.Duration

	// GroupGracePeriod specifies the amount of time the server will wait for an incoming task before aggregating
	// the tasks in a group. If an incoming task is received within this period, the server will wait for another
	// period of the same length, up to GroupMaxDelay if specified.
	//
	// If unset or zero, the grace period is set to 1 minute.
	// Minimum duration for GroupGracePeriod is 1 second. If value specified is less than a second, the call to
	// NewServer will panic.
	GroupGracePeriod time.Duration

	// GroupMaxDelay specifies the maximum amount of time the server will wait for incoming tasks before aggregating
	// the tasks in a group.
	//
	// If unset or zero, no delay limit is used.
	GroupMaxDelay time.Duration

	// GroupMaxSize specifies the maximum number of tasks that can be aggregated into a single task within a group.
	// If GroupMaxSize is reached, the server will aggregate the tasks into one immediately.
	//
	// If unset or zero, no size limit is used.
	GroupMaxSize int

	// GroupAggregator specifies the aggregation function used to aggregate multiple tasks in a group into one task.
	//
	// If unset or nil, the group aggregation feature will be disabled on the server.
	GroupAggregator GroupAggregator
}

Config specifies the server's background-task processing behavior.

type DailyStats (added in v0.11.0)

type DailyStats struct {
	// Name of the queue.
	Queue string

	// Total number of tasks processed on the given date.
	// The number includes both succeeded and failed tasks.
	Processed int

	// Total number of tasks that failed processing on the given date.
	Failed int

	// Date these stats were taken.
	Date time.Time
}

DailyStats holds aggregate data for a given day for a given queue.

type ErrorHandler (added in v0.6.0)

An ErrorHandler handles an error that occurred during task processing.

type ErrorHandlerFunc (added in v0.6.0)

The ErrorHandlerFunc type is an adapter to allow the use of ordinary functions as an ErrorHandler. If f is a function with the appropriate signature, ErrorHandlerFunc(f) is an ErrorHandler that calls f.

func (ErrorHandlerFunc) HandleError (added in v0.6.0)

func (fn ErrorHandlerFunc) HandleError(ctx context.Context, task *Task, err error)

HandleError calls fn(ctx, task, err).

type GroupAggregator (added in v0.23.0)

type GroupAggregator interface {
	// Aggregate aggregates the given tasks in a group with the given group name,
	// and returns a new task which is the aggregation of those tasks.
	//
	// Use NewTask(typename, payload, opts...) to set any options for the aggregated task.
	// The Queue option, if provided, will be ignored and the aggregated task will always be enqueued
	// to the same queue the group belonged to.
	Aggregate(group string, tasks []*Task) *Task
}

GroupAggregator aggregates a group of tasks into one before the tasks are passed to the Handler.

type GroupAggregatorFunc (added in v0.23.0)

The GroupAggregatorFunc type is an adapter to allow the use of ordinary functions as a GroupAggregator. If f is a function with the appropriate signature, GroupAggregatorFunc(f) is a GroupAggregator that calls f.
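A sketch of an aggregator with the documented shape `func(group string, tasks []*Task) *Task`. To stay runnable without the asynq module it uses a hypothetical `task` struct in place of `*asynq.Task`, and the joining strategy (payloads concatenated one per line under an `aggregated:<group>` type name) is an arbitrary example:

```go
package main

import (
	"bytes"
	"fmt"
)

// task is a minimal stand-in for *asynq.Task (type name plus payload).
type task struct {
	typename string
	payload  []byte
}

// aggregateFunc mirrors the shape of GroupAggregatorFunc.
type aggregateFunc func(group string, tasks []*task) *task

// joinPayloads is a hypothetical aggregator that concatenates the payloads of
// all grouped tasks, one per line, into a single "aggregated:<group>" task.
func joinPayloads(group string, tasks []*task) *task {
	parts := make([][]byte, 0, len(tasks))
	for _, t := range tasks {
		parts = append(parts, t.payload)
	}
	return &task{typename: "aggregated:" + group, payload: bytes.Join(parts, []byte("\n"))}
}

// Compile-time check that joinPayloads has the aggregator shape.
var _ aggregateFunc = joinPayloads

func main() {
	in := []*task{{"email", []byte("a@example.com")}, {"email", []byte("b@example.com")}}
	out := joinPayloads("newsletter", in)
	fmt.Printf("%s\n%s\n", out.typename, out.payload)
}
```

With asynq itself, the same body would take `[]*asynq.Task`, read `t.Payload()`, build the result with `asynq.NewTask`, and be installed via `Config.GroupAggregator: asynq.GroupAggregatorFunc(fn)`.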

type GroupInfo (added in v0.23.0)

type GroupInfo struct {
	// Name of the group.
	Group string

	// Size is the total number of tasks in the group.
	Size int
}

GroupInfo represents a state of a group at a certain time.

type Handler

A Handler processes tasks.

ProcessTask should return nil if the processing of a task is successful.

If ProcessTask returns a non-nil error or panics, the task will be retried after a delay if retries remain; otherwise the task will be archived.

One exception to this rule is when ProcessTask returns a SkipRetry error. If the returned error is SkipRetry or an error that wraps SkipRetry, retry is skipped and the task is immediately archived instead.

func NotFoundHandler (added in v0.6.0)

func NotFoundHandler() Handler

NotFoundHandler returns a simple task handler that returns a "not found" error.

type HandlerFunc

The HandlerFunc type is an adapter to allow the use of ordinary functions as a Handler. If f is a function with the appropriate signature, HandlerFunc(f) is a Handler that calls f.

func (HandlerFunc) ProcessTask

func (fn HandlerFunc) ProcessTask(ctx context.Context, task *Task) error

ProcessTask calls fn(ctx, task).

type Inspector (added in v0.11.0)

type Inspector struct {
	// contains filtered or unexported fields
}

Inspector is a client interface to inspect and mutate the state of queues and tasks.

func NewInspector (added in v0.11.0)

func NewInspector(r RedisConnOpt) *Inspector

NewInspector returns a new instance of Inspector.

func (*Inspector) ArchiveAllAggregatingTasks (added in v0.23.0)

ArchiveAllAggregatingTasks archives all tasks from the given group, and reports the number of tasks archived.

func (*Inspector) ArchiveAllPendingTasks (added in v0.18.0)

ArchiveAllPendingTasks archives all pending tasks from the given queue, and reports the number of tasks archived.

func (*Inspector) ArchiveAllRetryTasks (added in v0.14.0)

ArchiveAllRetryTasks archives all retry tasks from the given queue, and reports the number of tasks archived.

func (*Inspector) ArchiveAllScheduledTasks (added in v0.14.0)

ArchiveAllScheduledTasks archives all scheduled tasks from the given queue, and reports the number of tasks archived.

func (*Inspector) ArchiveTask (added in v0.18.0)

ArchiveTask archives a task with the given id in the given queue. The task needs to be in pending, scheduled, or retry state, otherwise ArchiveTask will return an error.

If a queue with the given name doesn't exist, it returns an error wrapping ErrQueueNotFound. If a task with the given id doesn't exist in the queue, it returns an error wrapping ErrTaskNotFound. If the task is already archived, it returns a non-nil error.

func (*Inspector) CancelProcessing (added in v0.18.0)

CancelProcessing sends a signal to cancel processing of the task given a task id. CancelProcessing is best-effort, which means that it does not guarantee that the task with the given id will be canceled. The return value only indicates whether the cancelation signal has been sent.

func (*Inspector) ClusterKeySlot (added in v0.12.0)

ClusterKeySlot returns an integer identifying the hash slot the given queue hashes to.

func (*Inspector) ClusterNodes (added in v0.12.0)

func (i *Inspector) ClusterNodes(queue string) ([]*ClusterNode, error)

ClusterNodes returns a list of nodes the given queue belongs to.

Only relevant if task queues are stored in a Redis cluster.

func (*Inspector) DeleteAllAggregatingTasks (added in v0.23.0)

DeleteAllAggregatingTasks deletes all tasks from the specified group, and reports the number of tasks deleted.

func (*Inspector) DeleteAllArchivedTasks (added in v0.14.0)

DeleteAllArchivedTasks deletes all archived tasks from the specified queue, and reports the number of tasks deleted.

func (*Inspector) DeleteAllCompletedTasks (added in v0.19.0)

DeleteAllCompletedTasks deletes all completed tasks from the specified queue, and reports the number of tasks deleted.

func (*Inspector) DeleteAllPendingTasks (added in v0.18.0)

DeleteAllPendingTasks deletes all pending tasks from the specified queue, and reports the number of tasks deleted.

func (*Inspector) DeleteAllRetryTasks (added in v0.11.0)

DeleteAllRetryTasks deletes all retry tasks from the specified queue, and reports the number of tasks deleted.

func (*Inspector) DeleteAllScheduledTasks (added in v0.11.0)

DeleteAllScheduledTasks deletes all scheduled tasks from the specified queue, and reports the number of tasks deleted.

func (*Inspector) DeleteQueue (added in v0.14.0)

DeleteQueue removes the specified queue.

If force is set to true, DeleteQueue will remove the queue regardless of the queue size as long as no tasks are active in the queue. If force is set to false, DeleteQueue will remove the queue only if the queue is empty.

If the specified queue does not exist, DeleteQueue returns ErrQueueNotFound. If force is set to false and the specified queue is not empty, DeleteQueue returns ErrQueueNotEmpty.

func (*Inspector) DeleteTask (added in v0.18.0)

DeleteTask deletes a task with the given id from the given queue. The task needs to be in pending, scheduled, retry, or archived state, otherwise DeleteTask will return an error.

If a queue with the given name doesn't exist, it returns an error wrapping ErrQueueNotFound. If a task with the given id doesn't exist in the queue, it returns an error wrapping ErrTaskNotFound. If the task is in active state, it returns a non-nil error.

func (*Inspector) GetQueueInfo (added in v0.18.0)

GetQueueInfo returns current information of the given queue.

func (*Inspector) GetTaskInfo (added in v0.18.0)

GetTaskInfo retrieves task information given a task id and queue name.

Returns an error wrapping ErrQueueNotFound if a queue with the given name doesn't exist. Returns an error wrapping ErrTaskNotFound if a task with the given id doesn't exist in the queue.

func (*Inspector) Groups (added in v0.23.0)

Groups returns a list of all groups within the given queue.

func (*Inspector) History (added in v0.11.0)

func (i *Inspector) History(queue string, n int) ([]*DailyStats, error)

History returns a list of stats from the last n days.

func (*Inspector) ListActiveTasks (added in v0.12.0)

func (i *Inspector) ListActiveTasks(queue string, opts ...ListOption) ([]*TaskInfo, error)

ListActiveTasks retrieves active tasks from the specified queue.

By default, it retrieves the first 30 tasks.

func (*Inspector) ListAggregatingTasks (added in v0.23.0)

func (i *Inspector) ListAggregatingTasks(queue, group string, opts ...ListOption) ([]*TaskInfo, error)

ListAggregatingTasks retrieves aggregating tasks from the specified group.

By default, it retrieves the first 30 tasks.

func (*Inspector) ListArchivedTasks (added in v0.14.0)

func (i *Inspector) ListArchivedTasks(queue string, opts ...ListOption) ([]*TaskInfo, error)

ListArchivedTasks retrieves archived tasks from the specified queue. Tasks are sorted by LastFailedAt in descending order.

By default, it retrieves the first 30 tasks.

func (*Inspector) ListCompletedTasks (added in v0.19.0)

func (i *Inspector) ListCompletedTasks(queue string, opts ...ListOption) ([]*TaskInfo, error)

ListCompletedTasks retrieves completed tasks from the specified queue. Tasks are sorted by expiration time (i.e. CompletedAt + Retention) in descending order.

By default, it retrieves the first 30 tasks.

func (*Inspector) ListPendingTasks (added in v0.12.0)

func (i *Inspector) ListPendingTasks(queue string, opts ...ListOption) ([]*TaskInfo, error)

ListPendingTasks retrieves pending tasks from the specified queue.

By default, it retrieves the first 30 tasks.

func (*Inspector) ListRetryTasks (added in v0.11.0)

func (i *Inspector) ListRetryTasks(queue string, opts ...ListOption) ([]*TaskInfo, error)

ListRetryTasks retrieves retry tasks from the specified queue. Tasks are sorted by NextProcessAt in ascending order.

By default, it retrieves the first 30 tasks.

func (*Inspector) ListScheduledTasks (added in v0.11.0)

func (i *Inspector) ListScheduledTasks(queue string, opts ...ListOption) ([]*TaskInfo, error)

ListScheduledTasks retrieves scheduled tasks from the specified queue. Tasks are sorted by NextProcessAt in ascending order.

By default, it retrieves the first 30 tasks.

func (*Inspector) ListSchedulerEnqueueEvents (added in v0.14.0)

func (i *Inspector) ListSchedulerEnqueueEvents(entryID string, opts ...ListOption) ([]*SchedulerEnqueueEvent, error)

ListSchedulerEnqueueEvents retrieves a list of enqueue events from the specified scheduler entry.

By default, it retrieves the first 30 events.

func (*Inspector) PauseQueue (added in v0.11.0)

PauseQueue pauses task processing on the specified queue. If the queue is already paused, it will return a non-nil error.

func (*Inspector) RunAllAggregatingTasks (added in v0.23.0)

RunAllAggregatingTasks schedules all tasks from the given group to run, and reports the number of tasks scheduled to run.

func (*Inspector) RunAllArchivedTasks (added in v0.14.0)

RunAllArchivedTasks schedules all archived tasks from the given queue to run, and reports the number of tasks scheduled to run.

func (*Inspector) RunAllRetryTasks (added in v0.12.0)

RunAllRetryTasks schedules all retry tasks from the given queue to run, and reports the number of tasks scheduled to run.

func (*Inspector) RunAllScheduledTasks (added in v0.12.0)

RunAllScheduledTasks schedules all scheduled tasks from the given queue to run, and reports the number of tasks scheduled to run.

func (*Inspector) RunTask (added in v0.18.0)

RunTask updates the task to pending state given a queue name and task id. The task needs to be in scheduled, retry, or archived state, otherwise RunTask will return an error.

If a queue with the given name doesn't exist, it returns an error wrapping ErrQueueNotFound. If a task with the given id doesn't exist in the queue, it returns an error wrapping ErrTaskNotFound. If the task is in pending or active state, it returns a non-nil error.
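The state requirements of ArchiveTask, DeleteTask, and RunTask can be collected into one table. A sketch that encodes only the states listed in their descriptions; `taskState`, `allowedFrom`, and `canApply` are hypothetical names, and states those descriptions do not mention (such as completed or aggregating) are conservatively treated as not allowed:

```go
package main

import "fmt"

// taskState is a local stand-in for the task states named in these docs.
type taskState string

const (
	active    taskState = "active"
	pending   taskState = "pending"
	scheduled taskState = "scheduled"
	retry     taskState = "retry"
	archived  taskState = "archived"
)

// allowedFrom lists, per Inspector method, the states its description says the
// task must be in. Unlisted states are treated as not allowed.
var allowedFrom = map[string][]taskState{
	"ArchiveTask": {pending, scheduled, retry},
	"DeleteTask":  {pending, scheduled, retry, archived},
	"RunTask":     {scheduled, retry, archived},
}

// canApply reports whether method may be applied to a task in state s.
func canApply(method string, s taskState) bool {
	for _, want := range allowedFrom[method] {
		if want == s {
			return true
		}
	}
	return false
}

func main() {
	for _, m := range []string{"ArchiveTask", "DeleteTask", "RunTask"} {
		fmt.Printf("%-11s pending=%-5v archived=%-5v active=%v\n",
			m, canApply(m, pending), canApply(m, archived), canApply(m, active))
	}
}
```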

func (*Inspector) SchedulerEntries (added in v0.14.0)

func (i *Inspector) SchedulerEntries() ([]*SchedulerEntry, error)

SchedulerEntries returns a list of all entries registered with currently running schedulers.

func (*Inspector) Servers (added in v0.14.0)

func (i *Inspector) Servers() ([]*ServerInfo, error)

Servers returns a list of running servers' information.

func (*Inspector) UnpauseQueue (added in v0.11.0)

UnpauseQueue resumes task processing on the specified queue. If the queue is not paused, it will return a non-nil error.

type ListOption (added in v0.11.0)

type ListOption interface{}

ListOption specifies the behavior of a list operation.

func Page (added in v0.11.0)

func Page(n int) ListOption

Page returns an option to specify the page number for a list operation. The value 1 fetches the first page.

Negative page number is treated as one.

func PageSize (added in v0.11.0)

func PageSize(n int) ListOption

PageSize returns an option to specify the page size for a list operation.

Negative page size is treated as zero.
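A sketch of the index arithmetic that Page and PageSize imply, assuming pages are contiguous, 1-based slices of the list. `pageRange` is hypothetical, and clamping page 0 to page 1 is an assumption (the text above only covers negative values):

```go
package main

import "fmt"

// pageRange returns the half-open index range [start, end) that a list call
// would cover for the given page and page size. Page numbers start at 1 and
// negative page sizes become zero; treating page 0 like page 1 is assumed.
func pageRange(page, size int) (start, end int) {
	if page < 1 {
		page = 1
	}
	if size < 0 {
		size = 0
	}
	start = (page - 1) * size
	return start, start + size
}

func main() {
	// 30 matches the default page size mentioned for the List* methods.
	for _, p := range []int{1, 2, 3} {
		s, e := pageRange(p, 30)
		fmt.Printf("page %d -> tasks %d..%d\n", p, s, e-1)
	}
}
```

Under that assumption, `inspector.ListPendingTasks("default", asynq.Page(3), asynq.PageSize(30))` would cover the tasks at positions 60 through 89.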

type LogLevel (added in v0.9.0)

type LogLevel int32

LogLevel represents a logging level.

It satisfies the flag.Value interface.

const (
	// DebugLevel is the lowest level of logging.
	// Debug logs are intended for debugging and development purposes.
	DebugLevel LogLevel

	// InfoLevel is used for general informational log messages.
	InfoLevel

	// WarnLevel is used for undesired but relatively expected events,
	// which may indicate a problem.
	WarnLevel

	// ErrorLevel is used for undesired and unexpected events that
	// the program can recover from.
	ErrorLevel

	// FatalLevel is used for undesired and unexpected events that
	// the program cannot recover from.
	FatalLevel
)

type Logger (added in v0.6.1)

type Logger interface {
	// Debug logs a message at Debug level.
	Debug(args ...interface{})

	// Info logs a message at Info level.
	Info(args ...interface{})

	// Warn logs a message at Warning level.
	Warn(args ...interface{})

	// Error logs a message at Error level.
	Error(args ...interface{})

	// Fatal logs a message at Fatal level
	// and the process will exit with status set to 1.
	Fatal(args ...interface{})
}

Logger supports logging at various log levels.

type MiddlewareFunc (added in v0.6.2)

MiddlewareFunc is a function which receives an asynq.Handler and returns another asynq.Handler. Typically, the returned handler is a closure which does something with the context and task passed to it, and then calls the handler passed as parameter to the MiddlewareFunc.

type Option

type Option interface {
	// String returns a string representation of the option.
	String() string

	// Type describes the type of the option.
	Type() OptionType

	// Value returns a value used to create this option.
	Value() interface{}
}

Option specifies the task processing behavior.

func Deadline (added in v0.6.1)

Deadline returns an option to specify the deadline for the given task. If it reaches the deadline before the Handler returns, then the task will be retried.

If there's a conflicting Timeout option, whichever comes earliest will be used.

func Group (added in v0.23.0)

Group returns an option to specify the group used for the task. Tasks in a given queue with the same group will be aggregated into one task before being passed to the Handler.

func MaxRetry

MaxRetry returns an option to specify the max number of times the task will be retried.

Negative retry count is treated as zero retries.

func ProcessAt (added in v0.12.0)

ProcessAt returns an option to specify when to process the given task.

If there's a conflicting ProcessIn option, the last option passed to Enqueue overrides the others.

func ProcessIn (added in v0.12.0)

ProcessIn returns an option to specify when to process the given task relative to the current time.

If there's a conflicting ProcessAt option, the last option passed to Enqueue overrides the others.

func Retention (added in v0.19.0)

Retention returns an option to specify the duration of retention period for the task. If this option is provided, the task will be stored as a completed task after successful processing. A completed task will be deleted after the specified duration elapses.

func Timeout ¶ added in v0.4.0

Timeout returns an option to specify how long a task may run. If the timeout elapses before the Handler returns, then the task will be retried.

Zero duration means no limit.

If there's a conflicting Deadline option, whichever comes earliest will be used.

func Unique ¶ added in v0.7.0

Unique returns an option to enqueue a task only if the given task is unique. A task enqueued with this option is guaranteed to be unique within the given TTL. Once the task is processed successfully or the TTL expires, another task with the same uniqueness properties may be enqueued. An ErrDuplicateTask error is returned when enqueueing a duplicate task. The TTL must be greater than or equal to 1 second.

Uniqueness of a task is based on the following properties:

- Task Type

- Task Payload

- Queue Name

type OptionType ¶ added in v0.13.0

type OptionType int

const (
	MaxRetryOpt OptionType = iota
	QueueOpt
	TimeoutOpt
	DeadlineOpt
	UniqueOpt
	ProcessAtOpt
	ProcessInOpt
	TaskIDOpt
	RetentionOpt
	GroupOpt
)

type PeriodicTaskConfig ¶ added in v0.21.0

type PeriodicTaskConfig struct {

Cronspec string // required: must be a non-empty string

Task *Task // required: must be non-nil

Opts []Option // optional: can be nil

}

PeriodicTaskConfig specifies the details of a periodic task.

type PeriodicTaskConfigProvider ¶ added in v0.21.0

type PeriodicTaskConfigProvider interface {

GetConfigs() ([]*PeriodicTaskConfig, error)

}

PeriodicTaskConfigProvider provides configs for periodic tasks. GetConfigs will be called by a PeriodicTaskManager periodically to sync the scheduler's entries with the configs returned by the provider.

type PeriodicTaskManager ¶ added in v0.21.0

type PeriodicTaskManager struct {

// contains filtered or unexported fields

}

PeriodicTaskManager manages scheduling of periodic tasks. It syncs the scheduler's entries by calling the config provider periodically.
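You can picture the sync step as a diff between the entries the scheduler currently holds and the configs the provider returns. New configs are registered, and entries whose configs have disappeared are unregistered. The sketch below assumes a config is identified by its cronspec and task type only. periodicConfig and diffConfigs are hypothetical, and the manager's real comparison may take more fields into account, such as payload and options.

```go
package main

import (
	"fmt"
	"sort"
)

// periodicConfig is a simplified stand-in for PeriodicTaskConfig.
type periodicConfig struct {
	Cronspec string
	TaskType string
}

// key identifies a config; configs with equal keys are the same entry.
func (c periodicConfig) key() string { return c.Cronspec + "|" + c.TaskType }

// diffConfigs compares registered entries (config key -> entry ID) with the
// latest configs and reports which entry IDs to unregister and which
// configs to register. Duplicate configs in latest are registered once.
func diffConfigs(registered map[string]string, latest []periodicConfig) (unregister []string, register []periodicConfig) {
	wanted := make(map[string]bool, len(latest))
	for _, c := range latest {
		k := c.key()
		if wanted[k] {
			continue
		}
		wanted[k] = true
		if _, ok := registered[k]; !ok {
			register = append(register, c)
		}
	}
	for k, entryID := range registered {
		if !wanted[k] {
			unregister = append(unregister, entryID)
		}
	}
	sort.Strings(unregister) // map iteration order is random; sort for stable output
	return unregister, register
}

func main() {
	registered := map[string]string{
		periodicConfig{"* * * * *", "task1"}.key():  "entry-1",
		periodicConfig{"@every 30s", "task2"}.key(): "entry-2",
	}
	latest := []periodicConfig{{"* * * * *", "task1"}, {"@daily", "report"}}
	unregister, register := diffConfigs(registered, latest)
	fmt.Println("unregister:", unregister)
	fmt.Println("register:", register)
}
```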

func NewPeriodicTaskManager ¶ added in v0.21.0

func NewPeriodicTaskManager(opts PeriodicTaskManagerOpts) (*PeriodicTaskManager, error)

NewPeriodicTaskManager returns a new PeriodicTaskManager instance. The given opts should specify at least the RedisConnOpt and the PeriodicTaskConfigProvider.

func (*PeriodicTaskManager) Run ¶ added in v0.21.0

func (mgr *PeriodicTaskManager) Run() error

Run starts the manager and blocks until an os signal to exit the program is received. Once it receives a signal, it gracefully shuts down the manager.

func (*PeriodicTaskManager) Shutdown ¶ added in v0.21.0

func (mgr *PeriodicTaskManager) Shutdown()

Shutdown gracefully shuts down the manager. It notifies the background syncer goroutine to stop and stops the scheduler.

func (*PeriodicTaskManager) Start ¶ added in v0.21.0

func (mgr *PeriodicTaskManager) Start() error

Start starts a scheduler and a background goroutine that syncs the scheduler with the configs returned by the provider.

Start returns any error encountered at start up time.

type PeriodicTaskManagerOpts ¶ added in v0.21.0

type PeriodicTaskManagerOpts struct {

// Required: must be non-nil

PeriodicTaskConfigProvider PeriodicTaskConfigProvider

// Required: must be non-nil

RedisConnOpt RedisConnOpt

// Optional: scheduler options

*SchedulerOpts

// Optional: default is 3m

SyncInterval time.Duration

}

type QueueInfo ¶ added in v0.11.0

type QueueInfo struct {

// Name of the queue.

Queue string

// Total number of bytes that the queue and its tasks require to be stored in redis.

// It is an approximate memory usage value in bytes since the value is computed by sampling.

MemoryUsage int64

// Latency of the queue, measured by the oldest pending task in the queue.

Latency time.Duration

// Size is the total number of tasks in the queue.

// The value is the sum of Pending, Active, Scheduled, Retry, Aggregating and Archived.

Size int

// Groups is the total number of groups in the queue.

Groups int

// Number of pending tasks.

Pending int

// Number of active tasks.

Active int

// Number of scheduled tasks.

Scheduled int

// Number of retry tasks.

Retry int

// Number of archived tasks.

Archived int

// Number of stored completed tasks.

Completed int

// Number of aggregating tasks.

Aggregating int

// Total number of tasks being processed within the given date (counter resets daily).

// The number includes both succeeded and failed tasks.

Processed int

// Total number of tasks failed to be processed within the given date (counter resets daily).

Failed int

// Total number of tasks processed (cumulative).

ProcessedTotal int

// Total number of tasks failed (cumulative).

FailedTotal int

// Paused indicates whether the queue is paused.

// If true, tasks in the queue will not be processed.

Paused bool

// Time when this queue info snapshot was taken.

Timestamp time.Time

}

QueueInfo represents a state of a queue at a certain time.

type RedisClientOpt ¶ added in v0.2.0

type RedisClientOpt struct {

// Network type to use, either tcp or unix.

// Default is tcp.

Network string

// Redis server address in "host:port" format.

Addr string

// Username to authenticate the current connection when Redis ACLs are used.

// See: https://redis.io/commands/auth.

Username string

// Password to authenticate the current connection.

// See: https://redis.io/commands/auth.

Password string

// Redis DB to select after connecting to a server.

// See: https://redis.io/commands/select.

DB int

// Dial timeout for establishing new connections.

// Default is 5 seconds.

DialTimeout time.Duration

// Timeout for socket reads.

// If timeout is reached, read commands will fail with a timeout error

// instead of blocking.

//

// Use value -1 for no timeout and 0 for default.

// Default is 3 seconds.

ReadTimeout time.Duration

// Timeout for socket writes.

// If timeout is reached, write commands will fail with a timeout error

// instead of blocking.

//

// Use value -1 for no timeout and 0 for default.

// Default is ReadTimeout.

WriteTimeout time.Duration

// Maximum number of socket connections.

// Default is 10 connections per CPU as reported by runtime.NumCPU.

PoolSize int

// TLS Config used to connect to a server.

// TLS will be negotiated only if this field is set.

TLSConfig *tls.Config

}

RedisClientOpt is used to create a redis client that connects to a redis server directly.

func (RedisClientOpt) MakeRedisClient ¶ added in v0.15.0

func (opt RedisClientOpt) MakeRedisClient() interface{}

type RedisClusterClientOpt ¶ added in v0.12.0

type RedisClusterClientOpt struct {

// A seed list of host:port addresses of cluster nodes.

Addrs []string

// The maximum number of retries before giving up.

// Command is retried on network errors and MOVED/ASK redirects.

// Default is 8 retries.

MaxRedirects int

// Username to authenticate the current connection when Redis ACLs are used.

// See: https://redis.io/commands/auth.

Username string

// Password to authenticate the current connection.

// See: https://redis.io/commands/auth.

Password string

// Dial timeout for establishing new connections.

// Default is 5 seconds.

DialTimeout time.Duration

// Timeout for socket reads.

// If timeout is reached, read commands will fail with a timeout error

// instead of blocking.

//

// Use value -1 for no timeout and 0 for default.

// Default is 3 seconds.

ReadTimeout time.Duration

// Timeout for socket writes.

// If timeout is reached, write commands will fail with a timeout error

// instead of blocking.

//

// Use value -1 for no timeout and 0 for default.

// Default is ReadTimeout.

WriteTimeout time.Duration

// TLS Config used to connect to a server.

// TLS will be negotiated only if this field is set.

TLSConfig *tls.Config

}

RedisClusterClientOpt is used to create a redis client that connects to a redis cluster.

func (RedisClusterClientOpt) MakeRedisClient ¶ added in v0.15.0

func (opt RedisClusterClientOpt) MakeRedisClient() interface{}

type RedisConnOpt ¶ added in v0.2.0

type RedisConnOpt interface {

// MakeRedisClient returns a new redis client instance.

// Return value is intentionally opaque to hide the implementation detail of redis client.

MakeRedisClient() interface{}

}

RedisConnOpt is a discriminated union of types that represent Redis connection configuration options.

RedisConnOpt represents a sum of the following types:

- RedisClientOpt

- RedisFailoverClientOpt

- RedisClusterClientOpt

func ParseRedisURI ¶ added in v0.8.1

func ParseRedisURI(uri string) (RedisConnOpt, error)

ParseRedisURI parses redis uri string and returns RedisConnOpt if uri is valid. It returns a non-nil error if uri cannot be parsed.

Four URI schemes are supported: redis:, rediss:, redis-socket:, and redis-sentinel:. Supported formats are:

redis://[:password@]host[:port][/dbnumber]

rediss://[:password@]host[:port][/dbnumber]

redis-socket://[:password@]path[?db=dbnumber]

redis-sentinel://[:password@]host1[:port][,host2[:port]][,hostN[:port]][?master=masterName]

Example ¶

package main

import (

"fmt"

"log"

"github.com/hibiken/asynq"

)

func main() {

rconn, err := asynq.ParseRedisURI("redis://localhost:6379/10")

if err != nil {

log.Fatal(err)

}

r, ok := rconn.(asynq.RedisClientOpt)

if !ok {

log.Fatal("unexpected type")

}

fmt.Println(r.Addr)

fmt.Println(r.DB)

}

Output:

localhost:6379
10
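For the redis: and rediss: forms, the URI layout maps directly onto net/url. The following simplified parser is a sketch, not ParseRedisURI itself. It ignores the redis-socket: and redis-sentinel: schemes, returns a hypothetical redisTarget struct instead of a RedisConnOpt, and assumes that rediss: only signals that TLS should be enabled.

```go
package main

import (
	"fmt"
	"net/url"
	"strconv"
	"strings"
)

// redisTarget is a hypothetical, simplified parse result.
type redisTarget struct {
	Addr     string
	Password string
	DB       int
	TLS      bool
}

// parseRedisURL handles redis://[:password@]host[:port][/dbnumber]
// and the same layout with the rediss:// scheme.
func parseRedisURL(uri string) (redisTarget, error) {
	u, err := url.Parse(uri)
	if err != nil {
		return redisTarget{}, err
	}
	if u.Scheme != "redis" && u.Scheme != "rediss" {
		return redisTarget{}, fmt.Errorf("unsupported scheme %q", u.Scheme)
	}
	target := redisTarget{Addr: u.Host, TLS: u.Scheme == "rediss"}
	if u.User != nil {
		target.Password, _ = u.User.Password() // ":secret@" yields an empty username
	}
	if db := strings.TrimPrefix(u.Path, "/"); db != "" {
		if target.DB, err = strconv.Atoi(db); err != nil {
			return redisTarget{}, fmt.Errorf("invalid db number %q: %v", db, err)
		}
	}
	return target, nil
}

func main() {
	target, err := parseRedisURL("redis://:secret@localhost:6379/10")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(target.Addr, target.DB, target.TLS)
}
```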

type RedisFailoverClientOpt ¶ added in v0.2.0

type RedisFailoverClientOpt struct {

// Redis master name that is monitored by sentinels.

MasterName string

// Addresses of sentinels in "host:port" format.

// Use at least three sentinels to avoid problems described in

// https://redis.io/topics/sentinel.

SentinelAddrs []string

// Redis sentinel password.

SentinelPassword string

// Username to authenticate the current connection when Redis ACLs are used.

// See: https://redis.io/commands/auth.

Username string

// Password to authenticate the current connection.

// See: https://redis.io/commands/auth.

Password string

// Redis DB to select after connecting to a server.

// See: https://redis.io/commands/select.

DB int

// Dial timeout for establishing new connections.

// Default is 5 seconds.

DialTimeout time.Duration

// Timeout for socket reads.

// If timeout is reached, read commands will fail with a timeout error

// instead of blocking.

//

// Use value -1 for no timeout and 0 for default.

// Default is 3 seconds.

ReadTimeout time.Duration

// Timeout for socket writes.

// If timeout is reached, write commands will fail with a timeout error

// instead of blocking.

//

// Use value -1 for no timeout and 0 for default.

// Default is ReadTimeout

WriteTimeout time.Duration

// Maximum number of socket connections.

// Default is 10 connections per CPU as reported by runtime.NumCPU.

PoolSize int

// TLS Config used to connect to a server.

// TLS will be negotiated only if this field is set.

TLSConfig *tls.Config

}

RedisFailoverClientOpt is used to create a redis client that talks to redis sentinels for service discovery and has an automatic failover capability.

func (RedisFailoverClientOpt) MakeRedisClient ¶ added in v0.15.0

func (opt RedisFailoverClientOpt) MakeRedisClient() interface{}

type ResultWriter ¶ added in v0.19.0

type ResultWriter struct {

// contains filtered or unexported fields

}

ResultWriter is a client interface to write result data for a task. It writes the data to the redis instance the server is connected to.

Example ¶

package main

import (

"context"

"fmt"

"log"

"github.com/hibiken/asynq"

)

func main() {

// ResultWriter is only accessible in Handler.

h := func(ctx context.Context, task *asynq.Task) error {

// .. do task processing work

res := []byte("task result data")

n, err := task.ResultWriter().Write(res) // implements io.Writer

if err != nil {

return fmt.Errorf("failed to write task result: %v", err)

}

log.Printf(" %d bytes written", n)

return nil

}

_ = h

}

Output:

func (*ResultWriter) TaskID ¶ added in v0.19.0

func (w *ResultWriter) TaskID() string

TaskID returns the ID of the task the ResultWriter is associated with.

type RetryDelayFunc ¶ added in v0.14.0

type RetryDelayFunc func(n int, e error, t *Task) time.Duration

RetryDelayFunc calculates the retry delay duration for a failed task given the retry count, error, and the task.

n is the number of times the task has been retried. e is the error returned by the task handler. t is the task in question.

type Scheduler ¶ added in v0.13.0

type Scheduler struct {

// contains filtered or unexported fields

}

A Scheduler kicks off tasks at regular intervals based on the user-defined schedule.

Schedulers are safe for concurrent use by multiple goroutines.

Example ¶

package main

import (

"log"

"time"

"github.com/hibiken/asynq"

)

func main() {

scheduler := asynq.NewScheduler(

asynq.RedisClientOpt{Addr: ":6379"},

&asynq.SchedulerOpts{Location: time.Local},

)

if _, err := scheduler.Register("* * * * *", asynq.NewTask("task1", nil)); err != nil {

log.Fatal(err)

}

if _, err := scheduler.Register("@every 30s", asynq.NewTask("task2", nil)); err != nil {

log.Fatal(err)

}

// Run blocks and waits for os signal to terminate the program.

if err := scheduler.Run(); err != nil {

log.Fatal(err)

}

}

Output:

func NewScheduler ¶ added in v0.13.0

func NewScheduler(r RedisConnOpt, opts *SchedulerOpts) *Scheduler

NewScheduler returns a new Scheduler instance given the redis connection option. The opts parameter is optional; defaults are used if opts is nil.

func (*Scheduler) Register ¶ added in v0.13.0

func (s *Scheduler) Register(cronspec string, task *Task, opts ...Option) (entryID string, err error)

Register registers a task to be enqueued on the given schedule specified by the cronspec. It returns an ID of the newly registered entry.

func (*Scheduler) Run ¶ added in v0.13.0

Run starts the scheduler and blocks until an OS signal to exit the program is received. It returns an error if the scheduler is already running or has been shut down.

func (*Scheduler) Shutdown ¶ added in v0.18.0

func (s *Scheduler) Shutdown()

Shutdown stops and shuts down the scheduler.

func (*Scheduler) Start ¶ added in v0.13.0

Start starts the scheduler. It returns an error if the scheduler is already running or has been shut down.

func (*Scheduler) Unregister ¶ added in v0.16.0

Unregister removes a registered entry by entry ID. Unregister returns a non-nil error if no entries were found for the given entryID.

type SchedulerEnqueueEvent ¶ added in v0.14.0

type SchedulerEnqueueEvent struct {

// ID of the task that was enqueued.

TaskID string

// Time the task was enqueued.

EnqueuedAt time.Time

}

SchedulerEnqueueEvent holds information about an enqueue event by a scheduler.

type SchedulerEntry ¶ added in v0.14.0

type SchedulerEntry struct {

// Identifier of this entry.

ID string

// Spec describes the schedule of this entry.

Spec string

// Periodic Task registered for this entry.

Task *Task

// Opts is the options for the periodic task.

Opts []Option

// Next shows the next time the task will be enqueued.

Next time.Time

// Prev shows the last time the task was enqueued.

// Zero time if task was never enqueued.

Prev time.Time

}

SchedulerEntry holds information about a periodic task registered with a scheduler.

type SchedulerOpts ¶ added in v0.13.0

type SchedulerOpts struct {

// Logger specifies the logger used by the scheduler instance.

//

// If unset, the default logger is used.

Logger Logger

// LogLevel specifies the minimum log level to enable.

//

// If unset, InfoLevel is used by default.

LogLevel LogLevel

// Location specifies the time zone location.

//

// If unset, the UTC time zone (time.UTC) is used.

Location *time.Location

// PreEnqueueFunc, if provided, is called before a task gets enqueued by Scheduler.

// The callback function should return quickly to not block the current thread.

PreEnqueueFunc func(task *Task, opts []Option)

// PostEnqueueFunc, if provided, is called after a task gets enqueued by Scheduler.

// The callback function should return quickly to not block the current thread.

PostEnqueueFunc func(info *TaskInfo, err error)

// Deprecated: Use PostEnqueueFunc instead

// EnqueueErrorHandler gets called when scheduler cannot enqueue a registered task

// due to an error.

EnqueueErrorHandler func(task *Task, opts []Option, err error)

}

SchedulerOpts specifies scheduler options.

type ServeMux ¶ added in v0.6.0

type ServeMux struct {

// contains filtered or unexported fields

}

ServeMux is a multiplexer for asynchronous tasks. It matches the type of each task against a list of registered patterns and calls the handler for the pattern that most closely matches the task's type name.

Longer patterns take precedence over shorter ones, so that if there are handlers registered for both "images" and "images:thumbnails", the latter handler will be called for tasks with a type name beginning with "images:thumbnails" and the former will receive tasks with type name beginning with "images".
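Patterns are matched as prefixes of the task type, so the lookup amounts to choosing the longest registered pattern that is a prefix of the type name. The standalone longestMatch function below is a simplified model of that rule, not ServeMux's implementation.

```go
package main

import (
	"fmt"
	"strings"
)

// longestMatch returns the longest pattern that is a prefix of typename,
// or "" if none applies (ServeMux would use its "not found" handler then).
func longestMatch(patterns []string, typename string) string {
	best := ""
	for _, p := range patterns {
		if strings.HasPrefix(typename, p) && len(p) > len(best) {
			best = p
		}
	}
	return best
}

func main() {
	patterns := []string{"images", "images:thumbnails", "email"}
	for _, typ := range []string{"images:thumbnails:resize", "images:upload", "email:welcome", "report"} {
		fmt.Printf("%-26s -> %q\n", typ, longestMatch(patterns, typ))
	}
}
```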

func NewServeMux ¶ added in v0.6.0

func NewServeMux() *ServeMux

NewServeMux allocates and returns a new ServeMux.

func (*ServeMux) Handle ¶ added in v0.6.0

Handle registers the handler for the given pattern. If a handler already exists for pattern, Handle panics.

func (*ServeMux) HandleFunc ¶ added in v0.6.0

HandleFunc registers the handler function for the given pattern.

func (*ServeMux) Handler ¶ added in v0.6.0

Handler returns the handler to use for the given task. It always returns a non-nil handler.

Handler also returns the registered pattern that matches the task.

If there is no registered handler that applies to the task, handler returns a 'not found' handler which returns an error.

func (*ServeMux) ProcessTask ¶ added in v0.6.0

ProcessTask dispatches the task to the handler whose pattern most closely matches the task type.

func (*ServeMux) Use ¶ added in v0.6.2

func (mux *ServeMux) Use(mws ...MiddlewareFunc)

Use appends a MiddlewareFunc to the chain. Middlewares are executed in the order that they are applied to the ServeMux.

type Server ¶ added in v0.8.0

type Server struct {

// contains filtered or unexported fields

}

Server is responsible for task processing and task lifecycle management.

Server pulls tasks off queues and processes them. If the processing of a task is unsuccessful, the server schedules it for a retry.

A task is retried until it is processed successfully or it reaches its max retry count.

If a task exhausts its retries, it is moved to the archive and kept in the archive set. Note that the archive size is finite: once it reaches its max size, the oldest tasks in the archive are deleted.

func NewServer ¶ added in v0.8.0

func NewServer(r RedisConnOpt, cfg Config) *Server

NewServer returns a new Server given a redis connection option and server configuration.

func (*Server) Run ¶ added in v0.8.0

Run starts task processing and blocks until an OS signal to exit the program is received. Once it receives a signal, it gracefully shuts down all active workers and the other goroutines used to process tasks.

Run returns any error encountered at server startup time. If the server has already been shut down, ErrServerClosed is returned.

Example ¶

package main

import (

"log"

"github.com/hibiken/asynq"

)

func main() {

srv := asynq.NewServer(

asynq.RedisClientOpt{Addr: ":6379"},

asynq.Config{Concurrency: 20},

)

h := asynq.NewServeMux()

// ... Register handlers

// Run blocks and waits for os signal to terminate the program.

if err := srv.Run(h); err != nil {

log.Fatal(err)

}

}

Output:

func (*Server) Shutdown ¶ added in v0.18.0

func (srv *Server) Shutdown()

Shutdown gracefully shuts down the server. It gracefully closes all active workers. The server waits for active workers to finish processing tasks for the duration specified in Config.ShutdownTimeout. If a worker does not finish processing a task within the timeout, the task is pushed back to Redis.

Example ¶

package main

import (

"log"

"os"

"os/signal"

"github.com/hibiken/asynq"

"golang.org/x/sys/unix"

)

func main() {

srv := asynq.NewServer(

asynq.RedisClientOpt{Addr: ":6379"},

asynq.Config{Concurrency: 20},

)

h := asynq.NewServeMux()

// ... Register handlers

if err := srv.Start(h); err != nil {

log.Fatal(err)

}

sigs := make(chan os.Signal, 1)

signal.Notify(sigs, unix.SIGTERM, unix.SIGINT)

<-sigs // wait for termination signal

srv.Shutdown()

}

Output:

func (*Server) Start ¶ added in v0.8.0

Start starts the worker server. Once the server has started, it pulls tasks off queues, starts a worker goroutine for each task, and calls Handler to process it. Tasks are processed concurrently by the workers, up to the concurrency limit specified in Config.Concurrency.

Start returns any error encountered at server startup time. If the server has already been shut down, ErrServerClosed is returned.

func (*Server) Stop ¶ added in v0.8.0

func (srv *Server) Stop()

Stop signals the server to stop pulling new tasks off queues. Stop can be used before shutting down the server to ensure that all currently active tasks are processed before server shutdown.

Stop does not shut down the server; make sure to call Shutdown before exiting.

Example ¶

package main

import (

"log"

"os"

"os/signal"

"github.com/hibiken/asynq"

"golang.org/x/sys/unix"

)

func main() {

srv := asynq.NewServer(

asynq.RedisClientOpt{Addr: ":6379"},

asynq.Config{Concurrency: 20},

)

h := asynq.NewServeMux()

// ... Register handlers

if err := srv.Start(h); err != nil {

log.Fatal(err)

}

sigs := make(chan os.Signal, 1)

signal.Notify(sigs, unix.SIGTERM, unix.SIGINT, unix.SIGTSTP)

// Handle SIGTERM, SIGINT to exit the program.

// Handle SIGTSTP to stop processing new tasks.

for {

s := <-sigs

if s == unix.SIGTSTP {

srv.Stop() // stop processing new tasks

continue

}

break // received SIGTERM or SIGINT signal

}

srv.Shutdown()

}

Output:

type ServerInfo ¶ added in v0.14.0

type ServerInfo struct {

// Unique Identifier for the server.

ID string

// Host machine on which the server is running.

Host string

// PID of the process in which the server is running.

PID int

// Server configuration details.

// See Config doc for field descriptions.

Concurrency int

Queues map[string]int

StrictPriority bool

// Time the server started.

Started time.Time

// Status indicates the status of the server.

// TODO: Update comment with more details.

Status string

// A List of active workers currently processing tasks.

ActiveWorkers []*WorkerInfo

}

ServerInfo describes a running Server instance.

type Task ¶

type Task struct {

// contains filtered or unexported fields

}

Task represents a unit of work to be performed.

func NewTask ¶ added in v0.2.0

NewTask returns a new Task given a type name and payload data. Options can be passed to configure task processing behavior.

func (*Task) ResultWriter ¶ added in v0.19.0

func (t *Task) ResultWriter() *ResultWriter

ResultWriter returns a pointer to the ResultWriter associated with the task.

A nil pointer is returned if called on a newly created task (i.e., a task created by calling NewTask). Only the tasks passed to Handler.ProcessTask have a valid ResultWriter pointer.

type TaskInfo ¶ added in v0.18.0

type TaskInfo struct {

// ID is the identifier of the task.

ID string

// Queue is the name of the queue to which the task belongs.

Queue string

// Type is the type name of the task.

Type string

// Payload is the payload data of the task.

Payload []byte

// State indicates the task state.

State TaskState

// MaxRetry is the maximum number of times the task can be retried.

MaxRetry int

// Retried is the number of times the task has been retried so far.

Retried int

// LastErr is the error message from the last failure.

LastErr string

// LastFailedAt is the time of the last failure, if any.

// If the task has no failures, LastFailedAt is zero time (i.e. time.Time{}).

LastFailedAt time.Time

// Timeout is the duration the task can be processed by Handler before being retried,

// zero if not specified

Timeout time.Duration

// Deadline is the deadline for the task, zero value if not specified.

Deadline time.Time

// Group is the name of the group to which the task belongs.

//

// Tasks in the same queue can be grouped together by Group name and will be aggregated into one task

// by a Server processing the queue.

//

// Empty string (default) indicates task does not belong to any groups, and no aggregation will be applied to the task.

Group string

// NextProcessAt is the time the task is scheduled to be processed,

// zero if not applicable.

NextProcessAt time.Time

// IsOrphaned describes whether the task is left in active state with no worker processing it.

// An orphaned task indicates that the worker has crashed or experienced network failures and was not able to

// extend its lease on the task.

//

// This task will be recovered by running a server against the queue the task is in.

// This field is only applicable to tasks with TaskStateActive.

IsOrphaned bool

// Retention is the duration of the retention period after the task is successfully processed.

Retention time.Duration

// CompletedAt is the time when the task is processed successfully.

// Zero value (i.e. time.Time{}) indicates no value.

CompletedAt time.Time

// Result holds the result data associated with the task.

// Use ResultWriter to write result data from the Handler.

Result []byte

}

A TaskInfo describes a task and its metadata.

type TaskState ¶ added in v0.18.0

type TaskState int

TaskState denotes the state of a task.

const (
	// Indicates that the task is currently being processed by Handler.
	TaskStateActive TaskState = iota + 1
	// Indicates that the task is ready to be processed by Handler.
	TaskStatePending
	// Indicates that the task is scheduled to be processed some time in the future.
	TaskStateScheduled
	// Indicates that the task has previously failed and is scheduled to be processed some time in the future.
	TaskStateRetry
	// Indicates that the task is archived and stored for inspection purposes.
	TaskStateArchived
	// Indicates that the task is processed successfully and retained until the retention TTL expires.
	TaskStateCompleted
	// Indicates that the task is waiting in a group to be aggregated into one task.
	TaskStateAggregating
)

type WorkerInfo ¶ added in v0.14.0

type WorkerInfo struct {

// ID of the task the worker is processing.

TaskID string

// Type of the task the worker is processing.

TaskType string

// Payload of the task the worker is processing.

TaskPayload []byte

// Queue from which the worker got its task.

Queue string

// Time the worker started processing the task.

Started time.Time

// Time the worker needs to finish processing the task by.

Deadline time.Time

}

WorkerInfo describes a running worker processing a task.


Directories

| Path | Synopsis |
|---|---|
| internal/base | Package base defines foundational types and constants used in asynq package. |
| internal/errors | Package errors defines the error type and functions used by asynq and its internal packages. |
| internal/log | Package log exports logging related types and functions. |
| internal/rdb | Package rdb encapsulates the interactions with redis. |
| internal/testbroker | Package testbroker exports a broker implementation that should be used in package testing. |
| internal/testutil | Package testutil defines test helpers for asynq and its internal packages. |
| internal/timeutil | Package timeutil exports functions and types related to time and date. |
| tools | module |
| x | module |