Documentation

Index ¶

- Constants

- Variables

- func GC()

- func GetDefaultGCEnabled() bool

- func GetDefaultIsRunned() bool

- func GetDefaultIterateInterval() time.Duration

- func IsDisabled() bool

- func IsHiddenTag(tagKey string, tagValue interface{}) bool

- func MemoryReuseEnabled() bool

- func Reset()

- func SetAggregationPeriods(newAggregationPeriods []AggregationPeriod)

- func SetAggregativeBufferSize(newBufferSize uint)

- func SetDefaultGCEnabled(newValue bool)

- func SetDefaultIsRan(newValue bool)

- func SetDefaultPercentiles(p []float64)

- func SetDefaultTags(newDefaultAnyTags AnyTags)

- func SetDisableFastTags(newDisableFastTags bool)

- func SetDisabled(newIsDisabled bool) bool

- func SetHiddenTags(newRawHiddenTags HiddenTags)

- func SetLimit(newLimit uint)

- func SetMemoryReuseEnabled(isEnabled bool)

- func SetMetricsIterateIntervaler(newMetricsIterateIntervaler IterateIntervaler)

- func SetSender(newMetricSender Sender)

- func SetSlicerInterval(newSlicerInterval time.Duration)

- func TagValueToString(vI interface{}) string

- type AggregationPeriod

- type AggregativeStatistics

- type AggregativeValue

- func (aggrV *AggregativeValue) Do(fn func(*AggregativeValue))

- func (aggrV *AggregativeValue) GetAvg() float64

- func (aggrV *AggregativeValue) LockDo(fn func(*AggregativeValue))

- func (r *AggregativeValue) MergeData(e *AggregativeValue)

- func (v *AggregativeValue) Release()

- func (v *AggregativeValue) String() string

- type AggregativeValues

- type AnyTags

- type AtomicFloat64

- type AtomicFloat64Interface

- type AtomicFloat64Ptr

- type AtomicUint64

- type ExceptValues

- type FastTag

- type FastTags

- func (tags *FastTags) Each(fn func(k string, v interface{}) bool)

- func (tags *FastTags) Get(key string) interface{}

- func (tags *FastTags) IsSet(key string) bool

- func (tags *FastTags) Len() int

- func (tags *FastTags) Less(i, j int) bool

- func (tags *FastTags) Release()

- func (tags *FastTags) Set(key string, value interface{}) AnyTags

- func (tags *FastTags) Sort()

- func (tags *FastTags) String() string

- func (tags *FastTags) Swap(i, j int)

- func (tags *FastTags) ToFastTags() *FastTags

- func (tags *FastTags) ToMap(fieldMaps ...map[string]interface{}) map[string]interface{}

- func (tags *FastTags) WriteAsString(writeStringer interface{ ... })

- type HiddenTag

- type HiddenTags

- type IterateIntervaler

- type Metric

- type MetricCount

- func (m *MetricCount) Add(delta int64) int64

- func (m *MetricCount) Get() int64

- func (m *MetricCount) GetFloat64() float64

- func (m *MetricCount) GetType() Type

- func (m *MetricCount) Increment() int64

- func (m *MetricCount) Release()

- func (m *MetricCount) Send(sender Sender)

- func (m *MetricCount) Set(newValue int64)

- func (m *MetricCount) SetValuePointer(newValuePtr *int64)

- type MetricGaugeAggregativeBuffered

- type MetricGaugeAggregativeFlow

- type MetricGaugeAggregativeSimple

- type MetricGaugeFloat64

- func (m *MetricGaugeFloat64) Add(delta float64) float64

- func (m *MetricGaugeFloat64) Get() float64

- func (m *MetricGaugeFloat64) GetFloat64() float64

- func (m *MetricGaugeFloat64) GetType() Type

- func (m *MetricGaugeFloat64) Release()

- func (m *MetricGaugeFloat64) Send(sender Sender)

- func (m *MetricGaugeFloat64) Set(newValue float64)

- func (w *MetricGaugeFloat64) SetValuePointer(newValuePtr *float64)

- type MetricGaugeFloat64Func

- func (m *MetricGaugeFloat64Func) EqualsTo(cmpI iterator) bool

- func (m *MetricGaugeFloat64Func) Get() float64

- func (m *MetricGaugeFloat64Func) GetCommons() *common

- func (m *MetricGaugeFloat64Func) GetFloat64() float64

- func (m *MetricGaugeFloat64Func) GetInterval() time.Duration

- func (m *MetricGaugeFloat64Func) GetType() Type

- func (m *MetricGaugeFloat64Func) IsGCEnabled() bool

- func (m *MetricGaugeFloat64Func) IsRunning() bool

- func (m *MetricGaugeFloat64Func) Iterate()

- func (m *MetricGaugeFloat64Func) MarshalJSON() ([]byte, error)

- func (m *MetricGaugeFloat64Func) Release()

- func (m *MetricGaugeFloat64Func) Run(interval time.Duration)

- func (m *MetricGaugeFloat64Func) Send(sender Sender)

- func (m *MetricGaugeFloat64Func) SetGCEnabled(enabled bool)

- func (m *MetricGaugeFloat64Func) Stop()

- type MetricGaugeInt64

- func (m *MetricGaugeInt64) Add(delta int64) int64

- func (m *MetricGaugeInt64) Decrement() int64

- func (m *MetricGaugeInt64) Get() int64

- func (m *MetricGaugeInt64) GetFloat64() float64

- func (m *MetricGaugeInt64) GetType() Type

- func (m *MetricGaugeInt64) Increment() int64

- func (m *MetricGaugeInt64) Release()

- func (m *MetricGaugeInt64) Send(sender Sender)

- func (m *MetricGaugeInt64) Set(newValue int64)

- func (m *MetricGaugeInt64) SetValuePointer(newValuePtr *int64)

- type MetricGaugeInt64Func

- func (m *MetricGaugeInt64Func) EqualsTo(cmpI iterator) bool

- func (m *MetricGaugeInt64Func) Get() int64

- func (m *MetricGaugeInt64Func) GetCommons() *common

- func (m *MetricGaugeInt64Func) GetFloat64() float64

- func (m *MetricGaugeInt64Func) GetInterval() time.Duration

- func (m *MetricGaugeInt64Func) GetType() Type

- func (m *MetricGaugeInt64Func) IsGCEnabled() bool

- func (m *MetricGaugeInt64Func) IsRunning() bool

- func (m *MetricGaugeInt64Func) Iterate()

- func (m *MetricGaugeInt64Func) MarshalJSON() ([]byte, error)

- func (m *MetricGaugeInt64Func) Release()

- func (m *MetricGaugeInt64Func) Run(interval time.Duration)

- func (m *MetricGaugeInt64Func) Send(sender Sender)

- func (m *MetricGaugeInt64Func) SetGCEnabled(enabled bool)

- func (m *MetricGaugeInt64Func) Stop()

- type MetricTimingBuffered

- type MetricTimingFlow

- type MetricTimingSimple

- type Metrics

- type NonAtomicFloat64

- type Registry

- func (r *Registry) Count(key string, tags AnyTags) *MetricCount

- func (r *Registry) GC()

- func (r *Registry) GaugeAggregativeBuffered(key string, tags AnyTags) *MetricGaugeAggregativeBuffered

- func (r *Registry) GaugeAggregativeFlow(key string, tags AnyTags) *MetricGaugeAggregativeFlow

- func (r *Registry) GaugeAggregativeSimple(key string, tags AnyTags) *MetricGaugeAggregativeSimple

- func (r *Registry) GaugeFloat64(key string, tags AnyTags) *MetricGaugeFloat64

- func (r *Registry) GaugeFloat64Func(key string, tags AnyTags, fn func() float64) *MetricGaugeFloat64Func

- func (r *Registry) GaugeInt64(key string, tags AnyTags) *MetricGaugeInt64

- func (r *Registry) GaugeInt64Func(key string, tags AnyTags, fn func() int64) *MetricGaugeInt64Func

- func (r *Registry) Get(metricType Type, key string, tags AnyTags) Metric

- func (r *Registry) GetDefaultGCEnabled() bool

- func (r *Registry) GetDefaultIsRan() bool

- func (r *Registry) GetDefaultIterateInterval() time.Duration

- func (r *Registry) GetSender() Sender

- func (r *Registry) IsDisabled() bool

- func (r *Registry) IsHiddenTag(tagKey string, tagValue interface{}) bool

- func (r *Registry) List() (result *Metrics)

- func (r *Registry) Register(metric Metric, key string, inTags AnyTags) error

- func (r *Registry) Reset()

- func (r *Registry) Set(metric Metric) error

- func (r *Registry) SetDefaultGCEnabled(newGCEnabledValue bool)

- func (r *Registry) SetDefaultIsRan(newIsRanValue bool)

- func (r *Registry) SetDefaultPercentiles(p []float64)

- func (r *Registry) SetDisabled(newIsDisabled bool) bool

- func (r *Registry) SetHiddenTags(newRawHiddenTags HiddenTags)

- func (r *Registry) SetSender(newMetricSender Sender)

- func (r *Registry) TimingBuffered(key string, tags AnyTags) *MetricTimingBuffered

- func (r *Registry) TimingFlow(key string, tags AnyTags) *MetricTimingFlow

- func (r *Registry) TimingSimple(key string, tags AnyTags) *MetricTimingSimple

- type Sender

- type Spinlock

- type Tags

- func (tags Tags) Copy() Tags

- func (tags Tags) Each(fn func(k string, v interface{}) bool)

- func (tags Tags) Get(key string) interface{}

- func (tags Tags) Keys() (result []string)

- func (tags Tags) Len() int

- func (tags Tags) Release()

- func (tags Tags) Set(key string, value interface{}) AnyTags

- func (tags Tags) String() string

- func (tags Tags) ToFastTags() *FastTags

- func (tags Tags) ToMap(fieldMaps ...map[string]interface{}) map[string]interface{}

- func (tags Tags) WriteAsString(writeStringer interface{ ... })

- type Type

Constants ¶

const (
	TypeCount = iota
	TypeGaugeInt64
	TypeGaugeInt64Func
	TypeGaugeFloat64
	TypeGaugeFloat64Func
	TypeGaugeAggregativeFlow
	TypeGaugeAggregativeBuffered
	TypeGaugeAggregativeSimple
	TypeTimingFlow
	TypeTimingBuffered
	TypeTimingSimple
)

Variables ¶

var (
	// ErrAlreadyExists should never be returned: it's an internal error.
	// If you get this error then please let us know.
	ErrAlreadyExists = errors.New(`such metric is already registered`)
)

Functions ¶

func GetDefaultGCEnabled ¶

func GetDefaultGCEnabled() bool

func GetDefaultIsRunned ¶

func GetDefaultIsRunned() bool

func IsDisabled ¶

func IsDisabled() bool

func IsHiddenTag ¶

func IsHiddenTag(tagKey string, tagValue interface{}) bool

func MemoryReuseEnabled ¶

func MemoryReuseEnabled() bool

MemoryReuseEnabled reports whether memory reuse is enabled.

func SetAggregationPeriods ¶

func SetAggregationPeriods(newAggregationPeriods []AggregationPeriod)

SetAggregationPeriods affects only new metrics (it doesn't affect already created ones). You may use the function `Reset()` to "update" the configuration of all metrics.

Each longer aggregation period should be a multiple of the shorter period preceding it.
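As an illustration of this rule, the sketch below checks a list of periods before it would be passed to SetAggregationPeriods. The helper `validateAggregationPeriods` and the local `aggregationPeriod` type are hypothetical (not part of this package), and requiring strictly increasing periods is an assumption added on top of the documented multiple-of rule.

```go
package main

import "fmt"

// aggregationPeriod mirrors the shape of AggregationPeriod for this sketch only.
type aggregationPeriod struct {
	Interval uint64 // in slicer intervals
}

// validateAggregationPeriods is a hypothetical helper: it checks that every
// period is positive, larger than the previous one, and a multiple of it.
func validateAggregationPeriods(periods []aggregationPeriod) error {
	for i, p := range periods {
		if p.Interval == 0 {
			return fmt.Errorf("period #%d: interval must be positive", i)
		}
		if i == 0 {
			continue
		}
		prev := periods[i-1].Interval
		if p.Interval <= prev || p.Interval%prev != 0 {
			return fmt.Errorf("period #%d (%d) is not a larger multiple of period #%d (%d)",
				i, p.Interval, i-1, prev)
		}
	}
	return nil
}

func main() {
	// 1, 5, 60 and 1440 slicer intervals: each is a multiple of the previous one.
	fmt.Println(validateAggregationPeriods([]aggregationPeriod{{1}, {5}, {60}, {1440}}))
	// 5 and 12: 12 is not a multiple of 5, so an error is returned.
	fmt.Println(validateAggregationPeriods([]aggregationPeriod{{5}, {12}}))
}
```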

func SetAggregativeBufferSize ¶

func SetAggregativeBufferSize(newBufferSize uint)

SetAggregativeBufferSize sets the size of the buffer used to store value samples. The larger this value is, the more precise the percentile values are, but the more RAM and CPU are consumed (see "Buffered" in README.md).
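To illustrate the precision/cost trade-off, here is a minimal sketch of a buffered percentile. It assumes a fixed-size ring buffer that keeps only the most recent samples and a nearest-rank percentile over a sorted copy; the package's actual "Buffered" method is described in README.md and may differ, and `sampleBuffer` is a hypothetical name. A larger buffer means each percentile is computed from more samples, at the cost of more memory and a longer sort.

```go
package main

import (
	"fmt"
	"math"
	"sort"
)

// sampleBuffer is a hypothetical fixed-size ring buffer of value samples.
type sampleBuffer struct {
	samples []float64
	next    int  // index to overwrite next
	full    bool // whether the buffer has wrapped around at least once
}

func newSampleBuffer(size uint) *sampleBuffer {
	return &sampleBuffer{samples: make([]float64, size)}
}

// ConsiderValue stores v, overwriting the oldest sample once the buffer is full.
func (b *sampleBuffer) ConsiderValue(v float64) {
	if len(b.samples) == 0 {
		return // a zero-size buffer keeps nothing
	}
	b.samples[b.next] = v
	b.next++
	if b.next == len(b.samples) {
		b.next = 0
		b.full = true
	}
}

// Percentile returns the nearest-rank percentile (p in 0.0 .. 1.0),
// or nil if no samples have been considered yet.
func (b *sampleBuffer) Percentile(p float64) *float64 {
	n := b.next
	if b.full {
		n = len(b.samples)
	}
	if n == 0 {
		return nil
	}
	sorted := make([]float64, n)
	copy(sorted, b.samples[:n])
	sort.Float64s(sorted)
	rank := int(math.Ceil(p*float64(n))) - 1
	if rank < 0 {
		rank = 0
	}
	if rank >= n {
		rank = n - 1
	}
	return &sorted[rank]
}

func main() {
	b := newSampleBuffer(100)
	for i := 1; i <= 100; i++ {
		b.ConsiderValue(float64(i))
	}
	fmt.Println(*b.Percentile(0.5)) // 50
}
```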

func SetDefaultGCEnabled ¶

func SetDefaultGCEnabled(newValue bool)

func SetDefaultIsRan ¶

func SetDefaultIsRan(newValue bool)

func SetDefaultPercentiles ¶

func SetDefaultPercentiles(p []float64)

func SetDefaultTags ¶

func SetDefaultTags(newDefaultAnyTags AnyTags)

func SetDisableFastTags ¶

func SetDisableFastTags(newDisableFastTags bool)

SetDisableFastTags forces the use of Tags instead of FastTags: if SetDisableFastTags(true) is set, NewFastTags() returns "Tags" instead of "*FastTags".

This is intended for debugging only (for example, to check whether a bug is caused by FastTags).

func SetDisabled ¶

func SetDisabled(newIsDisabled bool) bool

func SetHiddenTags ¶

func SetHiddenTags(newRawHiddenTags HiddenTags)

func SetMemoryReuseEnabled ¶

func SetMemoryReuseEnabled(isEnabled bool)

SetMemoryReuseEnabled defines whether memory reuse is enabled (it is enabled by default).

func SetMetricsIterateIntervaler ¶

func SetMetricsIterateIntervaler(newMetricsIterateIntervaler IterateIntervaler)

func SetSender ¶

func SetSender(newMetricSender Sender)

SetSender sets the handler responsible for sending metric values to a metrics server (such as StatsD).

func SetSlicerInterval ¶

func SetSlicerInterval(newSlicerInterval time.Duration)

SetSlicerInterval affects only new metrics (it doesn't affect already created ones). You may use the function `Reset()` to "update" the configuration of all metrics.

func TagValueToString ¶

func TagValueToString(vI interface{}) string

Types ¶

type AggregationPeriod ¶

type AggregationPeriod struct {

Interval uint64 // in slicerInterval-s

}

AggregationPeriod is used to define aggregation periods (see "Slicing" in "README.md")

func GetAggregationPeriods ¶

func GetAggregationPeriods() (r []AggregationPeriod)

GetAggregationPeriods returns the aggregation periods (see "Slicing" in README.md).

func GetBaseAggregationPeriod ¶

func GetBaseAggregationPeriod() *AggregationPeriod

GetBaseAggregationPeriod returns the AggregationPeriod equal to the slicer's interval (see "Slicing" in README.md).

func (*AggregationPeriod) String ¶

func (period *AggregationPeriod) String() string

String returns a string representation of the aggregation period

It returns a short format (such as "5s" or "1h") if the number of seconds is less than 60 or can be represented as an exact number of days, hours or minutes. Otherwise the format is like `1h5m0s`.

type AggregativeStatistics ¶

type AggregativeStatistics interface {

// GetPercentile returns the value for a given percentile (0.0 .. 1.0).

// It returns nil if the percentile could not be calculated (this may happen when using "flow" [instead of

// "buffered"] aggregative metrics).

//

// If you need to calculate multiple percentiles then use GetPercentiles() to get better performance

GetPercentile(percentile float64) *float64

// GetPercentiles returns values for given percentiles (0.0 .. 1.0).

// A value is nil if the percentile could not be calculated.

GetPercentiles(percentile []float64) []*float64

// GetDefaultPercentiles returns default percentiles and its values.

GetDefaultPercentiles() (percentiles []float64, values []float64)

// Set forces all the values in the statistics to be equal to the passed value

Set(staticValue float64)

// ConsiderValue is the analog of "Observe" in https://godoc.org/github.com/prometheus/client_golang/prometheus#Observer

// It merges the value into the statistics. For example, if only the values 1, 2 and 3 were considered, then

// the average value will be 2.

ConsiderValue(value float64)

// Release is used for memory reuse (it's called when the statistics are known to be no longer in use).

// This method is not meant to be called from external code; it is designed for internal use only.

Release()

//

MergeStatistics(AggregativeStatistics)

}

type AggregativeValue ¶

type AggregativeValue struct {

sync.Mutex

Count AtomicUint64

Min AtomicFloat64

Avg AtomicFloat64

Max AtomicFloat64

Sum AtomicFloat64

AggregativeStatistics

}

AggregativeValue is a struct that contains all the values related to an aggregation period.

func (*AggregativeValue) Do ¶

func (aggrV *AggregativeValue) Do(fn func(*AggregativeValue))

Do is like LockDo, but without acquiring the lock: it just calls fn with the value.

func (*AggregativeValue) GetAvg ¶

func (aggrV *AggregativeValue) GetAvg() float64

GetAvg just returns the average value

func (*AggregativeValue) LockDo ¶

func (aggrV *AggregativeValue) LockDo(fn func(*AggregativeValue))

LockDo is a thin wrapper around Lock()/Unlock(): it calls fn while holding the mutex. Since LockDo appears as its own frame in stack traces, it makes it easier to find out who caused a deadlock.
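A minimal sketch of the LockDo/Do pattern, using a hypothetical `value` type that embeds `sync.Mutex` the way AggregativeValue does: `LockDo` holds the mutex for the whole callback, while `Do` calls it directly and leaves synchronization to the caller. This is an illustration of the described behavior, not the package's code.

```go
package main

import (
	"fmt"
	"sync"
)

// value is a hypothetical stand-in for AggregativeValue.
type value struct {
	sync.Mutex
	Count uint64
	Sum   float64
}

// LockDo runs fn while holding the value's mutex.
func (v *value) LockDo(fn func(*value)) {
	v.Lock()
	defer v.Unlock()
	fn(v)
}

// Do runs fn without taking the lock; the caller must ensure exclusive access.
func (v *value) Do(fn func(*value)) {
	fn(v)
}

func main() {
	v := &value{}
	var wg sync.WaitGroup
	for i := 0; i < 100; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			// Concurrent updates are safe because each one runs under the mutex.
			v.LockDo(func(v *value) {
				v.Count++
				v.Sum += 1.5
			})
		}()
	}
	wg.Wait()
	fmt.Println(v.Count, v.Sum) // 100 150
}
```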

func (*AggregativeValue) MergeData ¶

func (r *AggregativeValue) MergeData(e *AggregativeValue)

MergeData merges the statistics of the argument "e" into the receiver.

func (*AggregativeValue) Release ¶

func (v *AggregativeValue) Release()

Release is the opposite of NewAggregativeValue: it puts the value back into a pool to avoid memory allocations in the future. Calling this method when you have finished working with an AggregativeValue is not required, but it is recommended (for better performance).
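The pool-based reuse described above can be sketched with the standard `sync.Pool`. The names `stats`, `newStats` and `(*stats).Release` are hypothetical; the point is that Release resets the object before returning it to the pool, so a later constructor call may receive a recycled object instead of allocating a new one.

```go
package main

import (
	"fmt"
	"sync"
)

// stats is a hypothetical pooled object, standing in for AggregativeValue.
type stats struct {
	Count uint64
	Sum   float64
}

var statsPool = sync.Pool{
	New: func() interface{} { return &stats{} },
}

// newStats takes an object from the pool (allocating only if the pool is empty).
func newStats() *stats {
	return statsPool.Get().(*stats)
}

// Release resets the object and puts it back into the pool.
// The caller must not use the object after calling Release.
func (s *stats) Release() {
	*s = stats{}
	statsPool.Put(s)
}

func main() {
	s := newStats()
	s.Count, s.Sum = 3, 6
	s.Release()

	t := newStats()             // may or may not be the same object as s
	fmt.Println(t.Count, t.Sum) // 0 0: the object is reset either way
}
```

Because `sync.Pool` may drop pooled objects at any time, skipping Release only costs an extra allocation, which matches the "not required, but recommended" advice.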

func (*AggregativeValue) String ¶

func (v *AggregativeValue) String() string

String returns a JSON string representing values (min, max, count, ...) of an aggregative value

type AggregativeValues ¶

type AggregativeValues struct {

// contains filtered or unexported fields

}

AggregativeValues is a full collection of "AggregativeValue"-s (see "Slicing" in README.md)

func (*AggregativeValues) ByPeriod ¶

func (vs *AggregativeValues) ByPeriod(idx int) *AggregativeValue

func (*AggregativeValues) Current ¶

func (vs *AggregativeValues) Current() *AggregativeValue

func (*AggregativeValues) Last ¶

func (vs *AggregativeValues) Last() *AggregativeValue

func (*AggregativeValues) Total ¶

func (vs *AggregativeValues) Total() *AggregativeValue

type AnyTags ¶

type AnyTags interface {

// Get value of tag by key

Get(key string) interface{}

// Set tag by key and value (if tag by the key does not exist then add it otherwise overwrite the value)

Set(key string, value interface{}) AnyTags

// Each iterates over all tags and passes tag key/value to the function (the argument)

// The function may return false if it's required to finish the loop prematurely

Each(func(key string, value interface{}) bool)

// ToFastTags returns the tags as "*FastTags"

ToFastTags() *FastTags

// ToMap returns the tags as a map, after overwriting the values of matching keys with the values from

// overwriteMaps

ToMap(overwriteMaps ...map[string]interface{}) map[string]interface{}

// Release puts the tags structure/slice back into the pool to be reused in the future

Release()

// WriteAsString writes tags in StatsD format through the WriteStringer passed as the argument

WriteAsString(interface{ WriteString(string) (int, error) })

// String returns the tags as a string in the StatsD format

String() string

// Len returns the amount/count of tags

Len() int

}

AnyTags is an abstraction over "Tags" and "*FastTags"

func NewFastTags ¶

func NewFastTags() AnyTags

NewFastTags returns an implementation of AnyTags with full memory-reuse support (unless SetDisableFastTags(true) is set).

This implementation is intended for cases where the pressure on the GC needs to be reduced (see "GCCPUFraction", https://golang.org/pkg/runtime/#MemStats).

This may be needed when a metric is retrieved very often and CPU utilization must be reduced.

If SetDisableFastTags(true) is set then it returns the same as "NewTags" (without full memory reuse).

See "Tags" in README.md

type AtomicFloat64 ¶

type AtomicFloat64 uint64

AtomicFloat64 is an implementation of atomic float64 using uint64 atomic instructions and `math.Float64frombits()`/`math.Float64bits()`
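The technique can be sketched as follows; `atomicFloat64` is a hypothetical stand-in, not the package's type. Get and Set are plain atomic load/store of the bit pattern, while Add needs a compare-and-swap loop because there is no atomic floating-point add instruction to call.

```go
package main

import (
	"fmt"
	"math"
	"sync"
	"sync/atomic"
)

// atomicFloat64 stores the IEEE 754 bits of a float64 in a uint64.
type atomicFloat64 uint64

func (f *atomicFloat64) Get() float64 {
	return math.Float64frombits(atomic.LoadUint64((*uint64)(f)))
}

func (f *atomicFloat64) Set(v float64) {
	atomic.StoreUint64((*uint64)(f), math.Float64bits(v))
}

// Add atomically adds a to the value and returns the new value.
func (f *atomicFloat64) Add(a float64) float64 {
	for {
		oldBits := atomic.LoadUint64((*uint64)(f))
		newValue := math.Float64frombits(oldBits) + a
		if atomic.CompareAndSwapUint64((*uint64)(f), oldBits, math.Float64bits(newValue)) {
			return newValue
		}
		// Another goroutine changed the value in between; retry.
	}
}

func main() {
	var f atomicFloat64
	var wg sync.WaitGroup
	for i := 0; i < 1000; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			f.Add(0.5)
		}()
	}
	wg.Wait()
	fmt.Println(f.Get()) // 500
}
```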

func (*AtomicFloat64) Add ¶

func (f *AtomicFloat64) Add(a float64) float64

Add adds the value to the current one (operator "plus")

func (*AtomicFloat64) AddFast ¶

func (f *AtomicFloat64) AddFast(n float64) float64

AddFast is like Add but without atomicity (faster, but not safe for concurrent use)

func (*AtomicFloat64) GetFast ¶

func (f *AtomicFloat64) GetFast() float64

GetFast is like Get but without atomicity (faster, but not safe for concurrent use)

func (*AtomicFloat64) SetFast ¶

func (f *AtomicFloat64) SetFast(n float64)

SetFast is like Set but without atomicity (faster, but not safe for concurrent use)

type AtomicFloat64Interface ¶

type AtomicFloat64Interface interface {

// Get returns the current value

Get() float64

// Set sets a new value

Set(float64)

// Add adds the value to the current one (operator "plus")

Add(float64) float64

// GetFast is like Get but without atomicity

GetFast() float64

// SetFast is like Set but without atomicity

SetFast(float64)

// AddFast is like Add but without atomicity

AddFast(float64) float64

}

AtomicFloat64Interface is an interface of an atomic float64 implementation. It's an abstraction over (*AtomicFloat64) and (*AtomicFloat64Ptr).

type AtomicFloat64Ptr ¶

type AtomicFloat64Ptr struct {

Pointer *float64

}

AtomicFloat64Ptr is like AtomicFloat64 but stores the value in a pointer "*float64" (which in turn could be changed from the outside to point at some other variable).

func (*AtomicFloat64Ptr) Add ¶

func (f *AtomicFloat64Ptr) Add(n float64) float64

Add adds the value to the current one (operator "plus")

func (*AtomicFloat64Ptr) AddFast ¶

func (f *AtomicFloat64Ptr) AddFast(n float64) float64

AddFast is like Add but without atomicity (faster, but not safe for concurrent use)

func (*AtomicFloat64Ptr) Get ¶

func (f *AtomicFloat64Ptr) Get() float64

Get returns the current value

func (*AtomicFloat64Ptr) GetFast ¶

func (f *AtomicFloat64Ptr) GetFast() float64

GetFast is like Get but without atomicity (faster, but not safe for concurrent use)

func (*AtomicFloat64Ptr) SetFast ¶

func (f *AtomicFloat64Ptr) SetFast(n float64)

SetFast is like Set but without atomicity (faster, but not safe for concurrent use)

type AtomicUint64 ¶

type AtomicUint64 uint64

AtomicUint64 is just a handy wrapper for uint64 with atomic primitives.

func (*AtomicUint64) Add ¶

func (v *AtomicUint64) Add(a uint64) uint64

Add adds the value to the current one (operator "plus")

type ExceptValues ¶

type ExceptValues []interface{}

type FastTag ¶

FastTag is an element of FastTags (see "FastTags")

func (*FastTag) GetValue ¶

func (tag *FastTag) GetValue() interface{}

GetValue returns the value of the tag. It returns it as an int64 if the value could be represented as an integer, or as a string if it cannot be represented as an integer.
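A minimal sketch of this int64-or-string behavior, assuming (hypothetically) that the tag keeps the string form of its value and also caches a base-10 integer form when one exists. The `tag` type and `newTag` constructor are illustrative names, not the package's FastTag internals.

```go
package main

import (
	"fmt"
	"strconv"
)

// tag is a hypothetical stand-in for FastTag: it keeps the string form of the
// value and, when the string is a base-10 integer, its int64 form as well.
type tag struct {
	Key         string
	StringValue string
	intValue    int64
	isInt       bool
}

func newTag(key, value string) *tag {
	t := &tag{Key: key, StringValue: value}
	if n, err := strconv.ParseInt(value, 10, 64); err == nil {
		t.intValue, t.isInt = n, true
	}
	return t
}

// GetValue returns an int64 if the value is an integer, otherwise the string.
func (t *tag) GetValue() interface{} {
	if t.isInt {
		return t.intValue
	}
	return t.StringValue
}

func main() {
	fmt.Printf("%T %T\n", newTag("code", "404").GetValue(), newTag("method", "GET").GetValue())
	// prints: int64 string
}
```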

type FastTags ¶

type FastTags struct {

Slice []*FastTag

// contains filtered or unexported fields

}

func GetDefaultTags ¶

func GetDefaultTags() *FastTags

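func (*FastTags) Each ¶

func (tags *FastTags) Each(fn func(k string, v interface{}) bool)

Each calls the function "fn" for each tag, passing the key and the value of the tag as the "k" and "v" arguments, respectively. Iteration stops early if "fn" returns false.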

func (*FastTags) Get ¶

func (tags *FastTags) Get(key string) interface{}

Get returns the value of the tag with key "key".

If there is no such tag, nil is returned.

func (*FastTags) Less ¶

func (tags *FastTags) Less(i, j int) bool

Less reports whether the Key of the tag at index "i" is less (by string comparison) than the Key of the tag at index "j".

func (*FastTags) Release ¶

func (tags *FastTags) Release()

Release clears the tags and puts them back into the pool. This is required for memory reuse.

See "Tags" in README.md

func (*FastTags) Set ¶

func (tags *FastTags) Set(key string, value interface{}) AnyTags

Set sets the value of the tag with key "key" to "value". If there is no such tag, it is created.

func (*FastTags) Sort ¶

func (tags *FastTags) Sort()

Sort sorts tags by keys (using Swap, Less and Len)
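Sorting via Len, Less and Swap means the tags type satisfies the standard `sort.Interface`. A minimal sketch with hypothetical `fastTag`/`fastTags` stand-ins (the real FastTag fields beyond `Slice` are not documented here):

```go
package main

import (
	"fmt"
	"sort"
)

// fastTag and fastTags are hypothetical stand-ins for FastTag and FastTags.
type fastTag struct {
	Key   string
	Value interface{}
}

type fastTags struct {
	Slice []*fastTag
}

func (tags *fastTags) Len() int           { return len(tags.Slice) }
func (tags *fastTags) Less(i, j int) bool { return tags.Slice[i].Key < tags.Slice[j].Key }
func (tags *fastTags) Swap(i, j int)      { tags.Slice[i], tags.Slice[j] = tags.Slice[j], tags.Slice[i] }

// Sort orders the tags by key; *fastTags satisfies sort.Interface.
func (tags *fastTags) Sort() { sort.Sort(tags) }

func main() {
	tags := &fastTags{Slice: []*fastTag{{"method", "GET"}, {"code", 200}, {"host", "a"}}}
	tags.Sort()
	keys := make([]string, 0, tags.Len())
	for _, t := range tags.Slice {
		keys = append(keys, t.Key)
	}
	fmt.Println(keys) // [code host method]
}
```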

func (*FastTags) ToFastTags ¶

func (tags *FastTags) ToFastTags() *FastTags

ToFastTags does nothing and returns the same tags.

This method is required to implement interface "AnyTags".

type HiddenTag ¶

type HiddenTag struct {

Key string

ExceptValues ExceptValues

}

type HiddenTags ¶

type HiddenTags []HiddenTag

type IterateIntervaler ¶

type Metric ¶

type Metric interface {

Iterate()

GetInterval() time.Duration

Run(time.Duration)

Stop()

Send(Sender)

GetKey() []byte

GetType() Type

GetName() string

GetTags() *FastTags

GetFloat64() float64

IsRunning() bool

Release()

IsGCEnabled() bool

SetGCEnabled(bool)

GetTag(string) interface{}

Registry() *Registry

// contains filtered or unexported methods

}

type MetricCount ¶

type MetricCount struct {

// contains filtered or unexported fields

}

MetricCount is the type of a "Count" metric.

Count metric is an analog of prometheus' "Counter", see: https://godoc.org/github.com/prometheus/client_golang/prometheus#Counter

func Count ¶

func Count(key string, tags AnyTags) *MetricCount

Count returns a metric of type "MetricCount".

For the same key and tags it will return the same metric.

If there is no such metric, it is created, registered in the registry and returned. If such a metric already exists, it is simply returned.
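A minimal sketch of these get-or-create semantics. It assumes the lookup key is built from the metric key plus the tags sorted by name, and it simplifies tags to `map[string]string`; the package's real key format and tag handling are not documented here, and `registry`, `counter` and `lookupKey` are hypothetical names.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
	"sync"
	"sync/atomic"
)

// counter is a hypothetical stand-in for MetricCount.
type counter struct{ value int64 }

func (c *counter) Increment() int64 { return atomic.AddInt64(&c.value, 1) }

type registry struct {
	mu      sync.Mutex
	metrics map[string]*counter
}

// lookupKey builds a deterministic key from the metric key and its tags
// (sorted by name, so tag order does not matter). Keys or values containing
// "," or "=" could collide; a real implementation would need to escape them.
func lookupKey(key string, tags map[string]string) string {
	names := make([]string, 0, len(tags))
	for name := range tags {
		names = append(names, name)
	}
	sort.Strings(names)
	var b strings.Builder
	b.WriteString(key)
	for _, name := range names {
		b.WriteString(",")
		b.WriteString(name)
		b.WriteString("=")
		b.WriteString(tags[name])
	}
	return b.String()
}

// Count returns the existing counter for (key, tags) or creates and registers one.
func (r *registry) Count(key string, tags map[string]string) *counter {
	k := lookupKey(key, tags)
	r.mu.Lock()
	defer r.mu.Unlock()
	if m, ok := r.metrics[k]; ok {
		return m
	}
	m := &counter{}
	r.metrics[k] = m
	return m
}

func main() {
	r := &registry{metrics: map[string]*counter{}}
	a := r.Count("requests", map[string]string{"method": "GET", "code": "200"})
	b := r.Count("requests", map[string]string{"code": "200", "method": "GET"})
	a.Increment()
	fmt.Println(a == b, b.Increment()) // true 2
}
```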

func (*MetricCount) Add ¶

func (m *MetricCount) Add(delta int64) int64

Add adds (+) the value of "delta" to the internal value and returns the result.

func (*MetricCount) GetFloat64 ¶

func (m *MetricCount) GetFloat64() float64

GetFloat64 returns the current internal value as float64 (the same as `float64(Get())`)

func (*MetricCount) GetType ¶

func (m *MetricCount) GetType() Type

GetType always returns "TypeCount" (because of type "MetricCount")

func (*MetricCount) Increment ¶

func (m *MetricCount) Increment() int64

Increment is an analog of Add(1). It just adds "1" to the internal value and returns the result.

func (*MetricCount) Release ¶

func (m *MetricCount) Release()

func (*MetricCount) Send ¶

func (m *MetricCount) Send(sender Sender)

Send initiates sending the internal value via the sender.

func (*MetricCount) Set ¶

func (m *MetricCount) Set(newValue int64)

Set overwrites the internal value with the value of the argument "newValue".

func (*MetricCount) SetValuePointer ¶

func (m *MetricCount) SetValuePointer(newValuePtr *int64)

SetValuePointer sets another pointer to be used to store the internal value of the metric
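The effect of SetValuePointer can be sketched with a counter whose storage is reached through a pointer that is itself swapped atomically. This is a hypothetical illustration (`pointerCounter` is not the package's type), and `atomic.Pointer` requires Go 1.19 or later. After the swap, updates land in the caller-provided variable.

```go
package main

import (
	"fmt"
	"sync/atomic"
)

// pointerCounter is a hypothetical counter that stores its value behind a pointer.
type pointerCounter struct {
	valuePtr atomic.Pointer[int64]
}

func newPointerCounter() *pointerCounter {
	c := &pointerCounter{}
	c.valuePtr.Store(new(int64))
	return c
}

func (c *pointerCounter) Add(delta int64) int64 { return atomic.AddInt64(c.valuePtr.Load(), delta) }
func (c *pointerCounter) Get() int64            { return atomic.LoadInt64(c.valuePtr.Load()) }

// SetValuePointer makes the counter use *p as its storage from now on.
func (c *pointerCounter) SetValuePointer(p *int64) { c.valuePtr.Store(p) }

func main() {
	c := newPointerCounter()
	c.Add(5)

	var external int64 = 100
	c.SetValuePointer(&external)
	c.Add(1)
	fmt.Println(c.Get(), external) // 101 101
}
```

Note that the value accumulated before the swap (5 here) stays in the old storage; it is not carried over to the new variable.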

type MetricGaugeAggregativeBuffered ¶

type MetricGaugeAggregativeBuffered struct {

// contains filtered or unexported fields

}

MetricGaugeAggregativeBuffered is an aggregative/summarizing metric (such as "average", "99th percentile" and so on). It's an analog of prometheus' "Summary" (see https://prometheus.io/docs/concepts/metric_types/#summary).

MetricGaugeAggregativeBuffered uses the "Buffered" method to aggregate the statistics (see "Buffered" in README.md)

func GaugeAggregativeBuffered ¶

func GaugeAggregativeBuffered(key string, tags AnyTags) *MetricGaugeAggregativeBuffered

GaugeAggregativeBuffered returns a metric of type "MetricGaugeAggregativeBuffered".

For the same key and tags it will return the same metric.

If there is no such metric, it is created, registered in the registry and returned. If such a metric already exists, it is simply returned.

func (*MetricGaugeAggregativeBuffered) ConsiderValue ¶

func (m *MetricGaugeAggregativeBuffered) ConsiderValue(v float64)

ConsiderValue adds a value to the statistics; it's an analog of prometheus' "Observe" (see https://godoc.org/github.com/prometheus/client_golang/prometheus#Summary).

func (*MetricGaugeAggregativeBuffered) GetType ¶

func (m *MetricGaugeAggregativeBuffered) GetType() Type

GetType always returns TypeGaugeAggregativeBuffered (because of object type "MetricGaugeAggregativeBuffered")

func (*MetricGaugeAggregativeBuffered) NewAggregativeStatistics ¶

func (m *MetricGaugeAggregativeBuffered) NewAggregativeStatistics() AggregativeStatistics

NewAggregativeStatistics returns a "Buffered" (see "Buffered" in README.md) implementation of AggregativeStatistics.

func (*MetricGaugeAggregativeBuffered) Release ¶

func (m *MetricGaugeAggregativeBuffered) Release()

type MetricGaugeAggregativeFlow ¶

type MetricGaugeAggregativeFlow struct {

// contains filtered or unexported fields

}

MetricGaugeAggregativeFlow is an aggregative/summarizing metric (such as "average", "99th percentile" and so on). It's an analog of prometheus' "Summary" (see https://prometheus.io/docs/concepts/metric_types/#summary).

MetricGaugeAggregativeFlow uses the "Flow" method to aggregate the statistics (see "Flow" in README.md)

func GaugeAggregativeFlow ¶

func GaugeAggregativeFlow(key string, tags AnyTags) *MetricGaugeAggregativeFlow

GaugeAggregativeFlow returns a metric of type "MetricGaugeAggregativeFlow".

For the same key and tags it will return the same metric.

If there is no such metric, it is created, registered in the registry and returned. If such a metric already exists, it is simply returned.

func (*MetricGaugeAggregativeFlow) ConsiderValue ¶

func (m *MetricGaugeAggregativeFlow) ConsiderValue(v float64)

ConsiderValue adds a value to the statistics; it's an analog of prometheus' "Observe" (see https://godoc.org/github.com/prometheus/client_golang/prometheus#Summary).

func (*MetricGaugeAggregativeFlow) GetType ¶

func (m *MetricGaugeAggregativeFlow) GetType() Type

GetType always returns TypeGaugeAggregativeFlow (because of object type "MetricGaugeAggregativeFlow")

func (*MetricGaugeAggregativeFlow) NewAggregativeStatistics ¶

func (m *MetricGaugeAggregativeFlow) NewAggregativeStatistics() AggregativeStatistics

NewAggregativeStatistics returns a "Flow" (see "Flow" in README.md) implementation of AggregativeStatistics.

func (*MetricGaugeAggregativeFlow) Release ¶

func (m *MetricGaugeAggregativeFlow) Release()

type MetricGaugeAggregativeSimple ¶

type MetricGaugeAggregativeSimple struct {

// contains filtered or unexported fields

}

MetricGaugeAggregativeSimple is an aggregative/summarizing metric (such as "average", "99th percentile" and so on). It's an analog of prometheus' "Summary" (see https://prometheus.io/docs/concepts/metric_types/#summary).

MetricGaugeAggregativeSimple uses the "Simple" method to aggregate the statistics (see "Simple" in README.md)

func GaugeAggregativeSimple ¶

func GaugeAggregativeSimple(key string, tags AnyTags) *MetricGaugeAggregativeSimple

GaugeAggregativeSimple returns a metric of type "MetricGaugeAggregativeSimple".

For the same key and tags it will return the same metric.

If there is no such metric, it is created, registered in the registry and returned. If such a metric already exists, it is simply returned.

func (*MetricGaugeAggregativeSimple) ConsiderValue ¶

func (m *MetricGaugeAggregativeSimple) ConsiderValue(v float64)

ConsiderValue adds a value to the statistics; it's an analog of prometheus' "Observe" (see https://godoc.org/github.com/prometheus/client_golang/prometheus#Summary).

func (*MetricGaugeAggregativeSimple) GetType ¶

func (m *MetricGaugeAggregativeSimple) GetType() Type

GetType always returns TypeGaugeAggregativeSimple (because of object type "MetricGaugeAggregativeSimple")

func (*MetricGaugeAggregativeSimple) NewAggregativeStatistics ¶

func (m *MetricGaugeAggregativeSimple) NewAggregativeStatistics() AggregativeStatistics

NewAggregativeStatistics returns nil.

"Simple" doesn't calculate percentile values, so it has no specific aggregative statistics; hence "nil".

See "Simple" in README.md
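Without percentiles, a "Simple"-style aggregation only needs a running count, min, max and sum, from which the average follows (matching the Count/Min/Avg/Max/Sum fields of AggregativeValue). A minimal sketch with a hypothetical `simpleStats` type, not the package's implementation and not safe for concurrent use, which also reproduces the "1, 2 and 3 give an average of 2" example from ConsiderValue:

```go
package main

import (
	"fmt"
	"math"
)

// simpleStats is a hypothetical aggregate without percentile support.
type simpleStats struct {
	Count    uint64
	Min, Max float64
	Sum      float64
}

func newSimpleStats() *simpleStats {
	return &simpleStats{Min: math.Inf(1), Max: math.Inf(-1)}
}

// ConsiderValue merges v into the aggregate.
func (s *simpleStats) ConsiderValue(v float64) {
	s.Count++
	s.Sum += v
	s.Min = math.Min(s.Min, v)
	s.Max = math.Max(s.Max, v)
}

// Avg returns the mean of the considered values (NaN if there are none).
func (s *simpleStats) Avg() float64 {
	if s.Count == 0 {
		return math.NaN()
	}
	return s.Sum / float64(s.Count)
}

func main() {
	s := newSimpleStats()
	for _, v := range []float64{1, 2, 3} {
		s.ConsiderValue(v)
	}
	fmt.Println(s.Count, s.Min, s.Avg(), s.Max) // 3 1 2 3
}
```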

func (*MetricGaugeAggregativeSimple) Release ¶

func (m *MetricGaugeAggregativeSimple) Release()

type MetricGaugeFloat64 ¶

type MetricGaugeFloat64 struct {

// contains filtered or unexported fields

}

MetricGaugeFloat64 is just a gauge metric which stores the value as float64. It's an analog of the "Gauge" metric of prometheus, see: https://prometheus.io/docs/concepts/metric_types/#gauge

func GaugeFloat64 ¶

func GaugeFloat64(key string, tags AnyTags) *MetricGaugeFloat64

GaugeFloat64 returns a metric of type "MetricGaugeFloat64".

For the same key and tags it will return the same metric.

If there is no such metric, it is created, registered in the registry and returned. If such a metric already exists, it is simply returned.

func (*MetricGaugeFloat64) Add ¶

func (m *MetricGaugeFloat64) Add(delta float64) float64

Add adds (+) the value of "delta" to the internal value and returns the result.

func (*MetricGaugeFloat64) Get ¶

func (m *MetricGaugeFloat64) Get() float64

Get returns the current internal value

func (*MetricGaugeFloat64) GetFloat64 ¶

func (m *MetricGaugeFloat64) GetFloat64() float64

GetFloat64 returns the current internal value (the same as `Get` for float64 metrics).

func (*MetricGaugeFloat64) GetType ¶

func (m *MetricGaugeFloat64) GetType() Type

GetType always returns TypeGaugeFloat64 (because of object type "MetricGaugeFloat64")

func (*MetricGaugeFloat64) Release ¶

func (m *MetricGaugeFloat64) Release()

func (*MetricGaugeFloat64) Send ¶

func (m *MetricGaugeFloat64) Send(sender Sender)

Send initiates sending the internal value via the sender.

func (*MetricGaugeFloat64) Set ¶

func (m *MetricGaugeFloat64) Set(newValue float64)

Set overwrites the internal value with the value of the argument "newValue".

func (*MetricGaugeFloat64) SetValuePointer ¶

func (w *MetricGaugeFloat64) SetValuePointer(newValuePtr *float64)

SetValuePointer sets another pointer to be used to store the internal value of the metric

type MetricGaugeFloat64Func ¶

type MetricGaugeFloat64Func struct {

// contains filtered or unexported fields

}

MetricGaugeFloat64Func is a gauge metric which uses a float64 value returned by a function.

This metric is the same as MetricGaugeFloat64, but uses a function as a source of values.

func GaugeFloat64Func ¶

func GaugeFloat64Func(key string, tags AnyTags, fn func() float64) *MetricGaugeFloat64Func

GaugeFloat64Func returns a metric of type "MetricGaugeFloat64Func".

MetricGaugeFloat64Func is a gauge metric which uses a float64 value returned by the function "fn".

This metric is the same as MetricGaugeFloat64, but uses the function "fn" as a source of values.

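Usually a metric of this kind needs the GC to be disabled: `metric.SetGCEnabled(false)`. Otherwise it may be stopped and removed from the metrics registry when its value does not change for a long time.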
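A minimal sketch of the "function as a source of values" idea, assuming a hypothetical `gaugeFloat64Func` type: the metric stores no value of its own and calls `fn` on every read, so it always reports the current state of whatever `fn` observes. The GC flag defaulting to enabled is also an assumption of the sketch.

```go
package main

import "fmt"

// gaugeFloat64Func is a hypothetical sketch of a gauge that reads its value from fn.
type gaugeFloat64Func struct {
	fn        func() float64
	gcEnabled bool
}

// newGaugeFloat64Func creates the metric with GC enabled (an assumed default).
func newGaugeFloat64Func(fn func() float64) *gaugeFloat64Func {
	return &gaugeFloat64Func{fn: fn, gcEnabled: true}
}

// Get calls fn every time; no value is stored inside the metric.
func (m *gaugeFloat64Func) Get() float64 { return m.fn() }

// SetGCEnabled toggles whether the metric may be garbage-collected.
func (m *gaugeFloat64Func) SetGCEnabled(enabled bool) { m.gcEnabled = enabled }

func main() {
	queue := []string{"a", "b", "c"}
	m := newGaugeFloat64Func(func() float64 { return float64(len(queue)) })
	m.SetGCEnabled(false) // keep a slowly-changing gauge from being garbage-collected
	fmt.Println(m.Get())  // 3
	queue = queue[:1]
	fmt.Println(m.Get()) // 1
}
```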

func (*MetricGaugeFloat64Func) EqualsTo ¶

func (m *MetricGaugeFloat64Func) EqualsTo(cmpI iterator) bool

EqualsTo checks whether the metric passed as the argument is the same metric.

func (*MetricGaugeFloat64Func) Get ¶

func (m *MetricGaugeFloat64Func) Get() float64

func (*MetricGaugeFloat64Func) GetCommons ¶

func (m *MetricGaugeFloat64Func) GetCommons() *common

GetCommons returns the *common of a metric (it is supposed to be used by internal routines only). The "*common" is a structure that is common to all types of metrics (with GC info, registry info and so on).

func (*MetricGaugeFloat64Func) GetFloat64 ¶

func (m *MetricGaugeFloat64Func) GetFloat64() float64

func (*MetricGaugeFloat64Func) GetInterval ¶

GetInterval returns the iteration interval (between sending or GC checks).

func (*MetricGaugeFloat64Func) GetType ¶

func (m *MetricGaugeFloat64Func) GetType() Type

func (*MetricGaugeFloat64Func) IsGCEnabled ¶

func (m *MetricGaugeFloat64Func) IsGCEnabled() bool

IsGCEnabled reports whether the GC is enabled for this metric (see method `SetGCEnabled`).

func (*MetricGaugeFloat64Func) IsRunning ¶

func (m *MetricGaugeFloat64Func) IsRunning() bool

IsRunning reports whether the metric has been started (run()) and not stopped (Stop()).

func (*MetricGaugeFloat64Func) Iterate ¶

func (m *MetricGaugeFloat64Func) Iterate()

Iterate runs the routines that are supposed to run once per selected interval. These routines send the metric value via the sender (see `SetSender`) and run the GC (which removes the metric if it has not been used for a long time).
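What one `Iterate` step does can be sketched as below. Everything here is hypothetical: the sender interface is simplified, and the GC rule (stop the metric after `gcThreshold` consecutive iterations with an unchanged value) is an assumption based on the `SetGCEnabled` description, not the library's exact logic. In the library, iterations are triggered once per interval (see `GetInterval`); the sketch simply calls `Iterate` in a loop.

```go
package main

import "fmt"

// floatSender is a hypothetical, simplified stand-in for the Sender interface.
type floatSender interface {
	SendFloat64(key string, value float64) error
}

// recordingSender keeps everything it is asked to send.
type recordingSender struct{ sent []string }

func (s *recordingSender) SendFloat64(key string, value float64) error {
	s.sent = append(s.sent, fmt.Sprintf("%s=%v", key, value))
	return nil
}

// funcMetric is a hypothetical sketch of a metric's per-interval bookkeeping.
type funcMetric struct {
	key         string
	value       func() float64
	gcEnabled   bool
	gcThreshold int // hypothetical: unchanged iterations tolerated before removal
	unchanged   int
	last        float64
	running     bool
}

// Iterate sends the current value and, if GC is enabled, stops the metric once the
// value has stayed the same for gcThreshold consecutive iterations.
func (m *funcMetric) Iterate(s floatSender) {
	v := m.value()
	if s != nil {
		_ = s.SendFloat64(m.key, v) // a real implementation would likely report the error
	}
	if v == m.last {
		m.unchanged++
	} else {
		m.unchanged = 0
		m.last = v
	}
	if m.gcEnabled && m.unchanged >= m.gcThreshold {
		m.running = false // per the docs, GC also removes the metric from the registry
	}
}

func main() {
	s := &recordingSender{}
	m := &funcMetric{key: "queue_len", value: func() float64 { return 5 }, gcEnabled: true, gcThreshold: 3, running: true}
	for m.running {
		m.Iterate(s) // normally triggered once per interval, e.g. by a time.Ticker
	}
	fmt.Println(len(s.sent), s.sent[0]) // 4 queue_len=5
}
```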

func (*MetricGaugeFloat64Func) MarshalJSON ¶

MarshalJSON returns a JSON representation of the metric for external monitoring systems.

func (*MetricGaugeFloat64Func) Release ¶

func (m *MetricGaugeFloat64Func) Release()

func (*MetricGaugeFloat64Func) Run ¶

Run starts the metric. We have not checked whether it is safe to call this method from external code, so using it is not recommended yet. Metrics start automatically after their creation, so there is usually no need to call this method.

func (*MetricGaugeFloat64Func) Send ¶

func (m *MetricGaugeFloat64Func) Send(sender Sender)

func (*MetricGaugeFloat64Func) SetGCEnabled ¶

func (m *MetricGaugeFloat64Func) SetGCEnabled(enabled bool)

SetGCEnabled sets whether this metric may be stopped and removed from the metrics registry if its value does not change for a long time.

type MetricGaugeInt64 ¶

type MetricGaugeInt64 struct {

// contains filtered or unexported fields

}

MetricGaugeInt64 is just a gauge metric which stores the value as int64. It's an analog of the "Gauge" metric of Prometheus, see: https://prometheus.io/docs/concepts/metric_types/#gauge

func GaugeInt64 ¶

func GaugeInt64(key string, tags AnyTags) *MetricGaugeInt64

GaugeInt64 returns a metric of type "MetricGaugeInt64".

For the same key and tags it will return the same metric.

If there is no such metric, it will create it, register it in the registry and return it. If such a metric already exists, it will just return it.

MetricGaugeInt64 is just a gauge metric which stores the value as int64. It's an analog of the "Gauge" metric of Prometheus, see: https://prometheus.io/docs/concepts/metric_types/#gauge

func (*MetricGaugeInt64) Add ¶

Add adds (+) the value of "delta" to the internal value and returns the resulting value.

func (*MetricGaugeInt64) Decrement ¶

func (m *MetricGaugeInt64) Decrement() int64

Decrement is an analog of Add(-1). It just subtracts "1" from the internal value and returns the result.

func (*MetricGaugeInt64) Get ¶

func (m *MetricGaugeInt64) Get() int64

Get returns the current internal value

func (*MetricGaugeInt64) GetFloat64 ¶

func (m *MetricGaugeInt64) GetFloat64() float64

GetFloat64 returns the current internal value as float64 (the same as `float64(Get())`)

func (*MetricGaugeInt64) GetType ¶

func (m *MetricGaugeInt64) GetType() Type

GetType always returns TypeGaugeInt64 (because the object type is "MetricGaugeInt64").

func (*MetricGaugeInt64) Increment ¶

func (m *MetricGaugeInt64) Increment() int64

Increment is an analog of Add(1). It just adds "1" to the internal value and returns the result.
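The Add/Increment/Decrement family maps naturally onto `sync/atomic`. The sketch below uses a hypothetical `gaugeInt64` type, not the library's, and shows the typical use of an int64 gauge as a counter of in-flight requests.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// gaugeInt64 is a hypothetical sketch of an int64 gauge backed by sync/atomic.
type gaugeInt64 struct{ value int64 }

func (m *gaugeInt64) Add(delta int64) int64 { return atomic.AddInt64(&m.value, delta) }
func (m *gaugeInt64) Increment() int64      { return m.Add(1) }
func (m *gaugeInt64) Decrement() int64      { return m.Add(-1) }
func (m *gaugeInt64) Get() int64            { return atomic.LoadInt64(&m.value) }
func (m *gaugeInt64) GetFloat64() float64   { return float64(m.Get()) }
func (m *gaugeInt64) Set(v int64)           { atomic.StoreInt64(&m.value, v) }

func main() {
	// Typical use: track the number of requests currently being handled.
	var inFlight gaugeInt64
	var wg sync.WaitGroup
	for i := 0; i < 10; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			inFlight.Increment()
			defer inFlight.Decrement() // runs before wg.Done (defers are LIFO)
			// ... handle the request ...
		}()
	}
	wg.Wait()
	fmt.Println(inFlight.Get()) // 0
}
```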

func (*MetricGaugeInt64) Release ¶

func (m *MetricGaugeInt64) Release()

func (*MetricGaugeInt64) Send ¶

func (m *MetricGaugeInt64) Send(sender Sender)

Send sends the internal value via the given sender.

func (*MetricGaugeInt64) Set ¶

func (m *MetricGaugeInt64) Set(newValue int64)

Set overwrites the internal value with the value of the argument "newValue".

func (*MetricGaugeInt64) SetValuePointer ¶

func (m *MetricGaugeInt64) SetValuePointer(newValuePtr *int64)

SetValuePointer sets another pointer to be used to store the internal value of the metric

type MetricGaugeInt64Func ¶

type MetricGaugeInt64Func struct {

// contains filtered or unexported fields

}

func GaugeInt64Func ¶

func GaugeInt64Func(key string, tags AnyTags, fn func() int64) *MetricGaugeInt64Func

func (*MetricGaugeInt64Func) EqualsTo ¶

func (m *MetricGaugeInt64Func) EqualsTo(cmpI iterator) bool

EqualsTo checks whether the metric passed as the argument is the same metric.

func (*MetricGaugeInt64Func) Get ¶

func (m *MetricGaugeInt64Func) Get() int64

func (*MetricGaugeInt64Func) GetCommons ¶

func (m *MetricGaugeInt64Func) GetCommons() *common

GetCommons returns the *common of a metric (it is supposed to be used by internal routines only). The "*common" is a structure that is common to all types of metrics (with GC info, registry info and so on).

func (*MetricGaugeInt64Func) GetFloat64 ¶

func (m *MetricGaugeInt64Func) GetFloat64() float64

func (*MetricGaugeInt64Func) GetInterval ¶

GetInterval returns the iteration interval (between sending or GC checks).

func (*MetricGaugeInt64Func) GetType ¶

func (m *MetricGaugeInt64Func) GetType() Type

func (*MetricGaugeInt64Func) IsGCEnabled ¶

func (m *MetricGaugeInt64Func) IsGCEnabled() bool

IsGCEnabled reports whether the GC is enabled for this metric (see method `SetGCEnabled`).

func (*MetricGaugeInt64Func) IsRunning ¶

func (m *MetricGaugeInt64Func) IsRunning() bool

IsRunning reports whether the metric has been started (run()) and not stopped (Stop()).

func (*MetricGaugeInt64Func) Iterate ¶

func (m *MetricGaugeInt64Func) Iterate()

Iterate runs the routines that are supposed to run once per selected interval. These routines send the metric value via the sender (see `SetSender`) and run the GC (which removes the metric if it has not been used for a long time).

func (*MetricGaugeInt64Func) MarshalJSON ¶

MarshalJSON returns a JSON representation of the metric for external monitoring systems.

func (*MetricGaugeInt64Func) Release ¶

func (m *MetricGaugeInt64Func) Release()

func (*MetricGaugeInt64Func) Run ¶

Run starts the metric. We have not checked whether it is safe to call this method from external code, so using it is not recommended yet. Metrics start automatically after their creation, so there is usually no need to call this method.

func (*MetricGaugeInt64Func) Send ¶

func (m *MetricGaugeInt64Func) Send(sender Sender)

func (*MetricGaugeInt64Func) SetGCEnabled ¶

func (m *MetricGaugeInt64Func) SetGCEnabled(enabled bool)

SetGCEnabled sets whether this metric may be stopped and removed from the metrics registry if its value does not change for a long time.

type MetricTimingBuffered ¶

type MetricTimingBuffered struct {

// contains filtered or unexported fields

}

func TimingBuffered ¶

func TimingBuffered(key string, tags AnyTags) *MetricTimingBuffered

func (*MetricTimingBuffered) ConsiderValue ¶

func (m *MetricTimingBuffered) ConsiderValue(v time.Duration)

func (*MetricTimingBuffered) GetType ¶

func (m *MetricTimingBuffered) GetType() Type

func (*MetricTimingBuffered) NewAggregativeStatistics ¶

func (m *MetricTimingBuffered) NewAggregativeStatistics() AggregativeStatistics

NewAggregativeStatistics returns a "Buffered" (see "Buffered" in README.md) implementation of AggregativeStatistics.

func (*MetricTimingBuffered) Release ¶

func (m *MetricTimingBuffered) Release()

type MetricTimingFlow ¶

type MetricTimingFlow struct {

// contains filtered or unexported fields

}

func TimingFlow ¶

func TimingFlow(key string, tags AnyTags) *MetricTimingFlow

func (*MetricTimingFlow) ConsiderValue ¶

func (m *MetricTimingFlow) ConsiderValue(v time.Duration)

func (*MetricTimingFlow) GetType ¶

func (m *MetricTimingFlow) GetType() Type

func (*MetricTimingFlow) NewAggregativeStatistics ¶

func (m *MetricTimingFlow) NewAggregativeStatistics() AggregativeStatistics

NewAggregativeStatistics returns a "Flow" (see "Flow" in README.md) implementation of AggregativeStatistics.

func (*MetricTimingFlow) Release ¶

func (m *MetricTimingFlow) Release()

type MetricTimingSimple ¶

type MetricTimingSimple struct {

// contains filtered or unexported fields

}

func TimingSimple ¶

func TimingSimple(key string, tags AnyTags) *MetricTimingSimple

func (*MetricTimingSimple) ConsiderValue ¶

func (m *MetricTimingSimple) ConsiderValue(v time.Duration)

func (*MetricTimingSimple) GetType ¶

func (m *MetricTimingSimple) GetType() Type

func (*MetricTimingSimple) NewAggregativeStatistics ¶

func (m *MetricTimingSimple) NewAggregativeStatistics() AggregativeStatistics

NewAggregativeStatistics returns nil.

"Simple" doesn't calculate percentile values, so it has no specific aggregative statistics; hence "nil".

See "Simple" in README.md.

func (*MetricTimingSimple) Release ¶

func (m *MetricTimingSimple) Release()

type NonAtomicFloat64 ¶

type NonAtomicFloat64 float64

NonAtomicFloat64 is just an implementation of AtomicFloat64Interface without any atomicity.

It is supposed to be used for debugging only.
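A sketch of what a non-atomic float64 value looks like, using a hypothetical `nonAtomicFloat64` type with the method set documented above. The `float64Adder` interface is an assumed subset of `AtomicFloat64Interface` (its full method set is not listed in this section), and it is assumed that `Add` returns the new value.

```go
package main

import "fmt"

// float64Adder is a hypothetical subset of AtomicFloat64Interface, limited to the
// methods documented for NonAtomicFloat64 in this section.
type float64Adder interface {
	Add(a float64) float64
	AddFast(n float64) float64
	Get() float64
	GetFast() float64
	SetFast(n float64)
}

// nonAtomicFloat64 uses plain arithmetic with no synchronization,
// so it must not be shared between goroutines.
type nonAtomicFloat64 float64

// Add adds a and returns the new value (assumed semantics).
func (f *nonAtomicFloat64) Add(a float64) float64 {
	*f += nonAtomicFloat64(a)
	return float64(*f)
}

func (f *nonAtomicFloat64) AddFast(n float64) float64 { return f.Add(n) }
func (f *nonAtomicFloat64) Get() float64              { return float64(*f) }
func (f *nonAtomicFloat64) GetFast() float64          { return f.Get() }
func (f *nonAtomicFloat64) SetFast(n float64)         { *f = nonAtomicFloat64(n) }

// Compile-time check that the sketch satisfies the assumed interface.
var _ float64Adder = (*nonAtomicFloat64)(nil)

func main() {
	var f nonAtomicFloat64
	var acc float64Adder = &f
	acc.Add(1.25)
	acc.AddFast(0.75)
	fmt.Println(acc.Get()) // 2
}
```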

func (*NonAtomicFloat64) Add ¶

func (f *NonAtomicFloat64) Add(a float64) float64

Add adds the value to the current one (operator "plus")

func (*NonAtomicFloat64) AddFast ¶

func (f *NonAtomicFloat64) AddFast(n float64) float64

AddFast is the same as Add

func (*NonAtomicFloat64) Get ¶

func (f *NonAtomicFloat64) Get() float64

Get returns the current value

func (*NonAtomicFloat64) GetFast ¶

func (f *NonAtomicFloat64) GetFast() float64

GetFast is the same as Get

func (*NonAtomicFloat64) SetFast ¶

func (f *NonAtomicFloat64) SetFast(n float64)

SetFast is the same as Set

type Registry ¶

type Registry struct {

// contains filtered or unexported fields

}

func (*Registry) Count ¶

func (r *Registry) Count(key string, tags AnyTags) *MetricCount

Count returns a metric of type "MetricCount".

For the same key and tags it will return the same metric.

If there is no such metric, it will create it, register it in the registry and return it. If such a metric already exists, it will just return it.

func (*Registry) GaugeAggregativeBuffered ¶

func (r *Registry) GaugeAggregativeBuffered(key string, tags AnyTags) *MetricGaugeAggregativeBuffered

GaugeAggregativeBuffered returns a metric of type "MetricGaugeAggregativeBuffered".

For the same key and tags it will return the same metric.

If there is no such metric, it will create it, register it in the registry and return it. If such a metric already exists, it will just return it.

MetricGaugeAggregativeBuffered is an aggregative/summarizing metric (like "average", "percentile 99" and so on). It's an analog of Prometheus' "Summary" (see https://prometheus.io/docs/concepts/metric_types/#summary).

MetricGaugeAggregativeBuffered uses the "Buffered" method to aggregate the statistics (see "Buffered" in README.md)
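As a generic illustration of what a "Summary"-style aggregation computes, the hypothetical `summarize` helper below derives the average and a nearest-rank percentile from a buffer of samples. It is not the library's "Buffered" or "Flow" algorithm (those are described in README.md); it only shows what "average" and "percentile 99" mean for a set of values.

```go
package main

import (
	"fmt"
	"math"
	"sort"
)

// summarize is a hypothetical helper that returns the average and the nearest-rank
// percentile (percentile given as a fraction, e.g. 0.99) of the samples.
func summarize(samples []float64, percentile float64) (avg, p float64) {
	if len(samples) == 0 {
		return 0, 0
	}
	sorted := append([]float64(nil), samples...) // do not reorder the caller's slice
	sort.Float64s(sorted)
	sum := 0.0
	for _, v := range sorted {
		sum += v
	}
	// Nearest rank: the smallest value such that at least `percentile` of samples are <= it.
	rank := int(math.Ceil(percentile * float64(len(sorted))))
	if rank < 1 {
		rank = 1
	}
	if rank > len(sorted) {
		rank = len(sorted)
	}
	return sum / float64(len(sorted)), sorted[rank-1]
}

func main() {
	samples := make([]float64, 0, 100)
	for i := 1; i <= 100; i++ {
		samples = append(samples, float64(i))
	}
	avg, p99 := summarize(samples, 0.99)
	fmt.Println(avg, p99) // 50.5 99
}
```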

func (*Registry) GaugeAggregativeFlow ¶

func (r *Registry) GaugeAggregativeFlow(key string, tags AnyTags) *MetricGaugeAggregativeFlow

GaugeAggregativeFlow returns a metric of type "MetricGaugeAggregativeFlow".

For the same key and tags it will return the same metric.

If there is no such metric, it will create it, register it in the registry and return it. If such a metric already exists, it will just return it.

MetricGaugeAggregativeFlow is an aggregative/summarizing metric (like "average", "percentile 99" and so on). It's an analog of Prometheus' "Summary" (see https://prometheus.io/docs/concepts/metric_types/#summary).

MetricGaugeAggregativeFlow uses the "Flow" method to aggregate the statistics (see "Flow" in README.md)

func (*Registry) GaugeAggregativeSimple ¶

func (r *Registry) GaugeAggregativeSimple(key string, tags AnyTags) *MetricGaugeAggregativeSimple

func (*Registry) GaugeFloat64 ¶

func (r *Registry) GaugeFloat64(key string, tags AnyTags) *MetricGaugeFloat64

GaugeFloat64 returns a metric of type "MetricGaugeFloat64".

For the same key and tags it will return the same metric.

If there is no such metric, it will create it, register it in the registry and return it. If such a metric already exists, it will just return it.

MetricGaugeFloat64 is just a gauge metric which stores the value as float64. It's an analog of the "Gauge" metric of Prometheus, see: https://prometheus.io/docs/concepts/metric_types/#gauge

func (*Registry) GaugeFloat64Func ¶

func (r *Registry) GaugeFloat64Func(key string, tags AnyTags, fn func() float64) *MetricGaugeFloat64Func

GaugeFloat64Func returns a metric of type "MetricGaugeFloat64Func".

MetricGaugeFloat64Func is a gauge metric which uses a float64 value returned by the function "fn".

This metric is the same as MetricGaugeFloat64, but uses the function "fn" as a source of values.

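Usually a metric of this kind needs the GC to be disabled: `metric.SetGCEnabled(false)`. Otherwise it may be stopped and removed from the metrics registry when its value does not change for a long time.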

func (*Registry) GaugeInt64 ¶

func (r *Registry) GaugeInt64(key string, tags AnyTags) *MetricGaugeInt64

GaugeInt64 returns a metric of type "MetricGaugeInt64".

For the same key and tags it will return the same metric.

If there is no such metric, it will create it, register it in the registry and return it. If such a metric already exists, it will just return it.

MetricGaugeInt64 is just a gauge metric which stores the value as int64. It's an analog of the "Gauge" metric of Prometheus, see: https://prometheus.io/docs/concepts/metric_types/#gauge

func (*Registry) GaugeInt64Func ¶

func (r *Registry) GaugeInt64Func(key string, tags AnyTags, fn func() int64) *MetricGaugeInt64Func

func (*Registry) GetDefaultGCEnabled ¶

func (*Registry) GetDefaultIsRan ¶

func (*Registry) GetDefaultIterateInterval ¶

func (*Registry) IsDisabled ¶

func (*Registry) IsHiddenTag ¶

func (*Registry) SetDefaultGCEnabled ¶

func (*Registry) SetDefaultIsRan ¶

func (*Registry) SetDefaultPercentiles ¶

func (*Registry) SetDisabled ¶

func (*Registry) SetHiddenTags ¶

func (r *Registry) SetHiddenTags(newRawHiddenTags HiddenTags)

func (*Registry) TimingBuffered ¶

func (r *Registry) TimingBuffered(key string, tags AnyTags) *MetricTimingBuffered

func (*Registry) TimingFlow ¶

func (r *Registry) TimingFlow(key string, tags AnyTags) *MetricTimingFlow

func (*Registry) TimingSimple ¶

func (r *Registry) TimingSimple(key string, tags AnyTags) *MetricTimingSimple

type Sender ¶

type Sender interface {

// SendInt64 is used to send signed integer values

SendInt64(metric Metric, key string, value int64) error

// SendUint64 is used to send unsigned integer values

SendUint64(metric Metric, key string, value uint64) error

// SendFloat64 is used to send float values

SendFloat64(metric Metric, key string, value float64) error

}

Sender is used to periodically send metric values (for example, to StatsD). On highly loaded systems we recommend using Prometheus and a status page with all exported metrics instead of sending metrics elsewhere.

Source Files ¶

- any_tags.go

- atomic_float64.go

- atomic_uint64.go

- common.go

- common_aggregative.go

- common_aggregative_buffered.go

- common_aggregative_flow.go

- common_aggregative_simple.go

- common_float64.go

- common_int64.go

- consider_value_queue.go

- count.go

- errors.go

- fast_tags.go

- fast_tags_sort.go

- gauge_aggregative_buffered.go

- gauge_aggregative_flow.go

- gauge_aggregative_simple.go

- gauge_float64.go

- gauge_float64_func.go

- gauge_int64.go

- gauge_int64_func.go

- hidden_tags.go

- iterators.go

- metric.go

- metrics.go

- pools.go

- recover_panic.go

- registry.go

- registry_item.go

- spinlock.go

- tags.go

- timing_buffered.go

- timing_flow.go

- timing_simple.go

- type.go

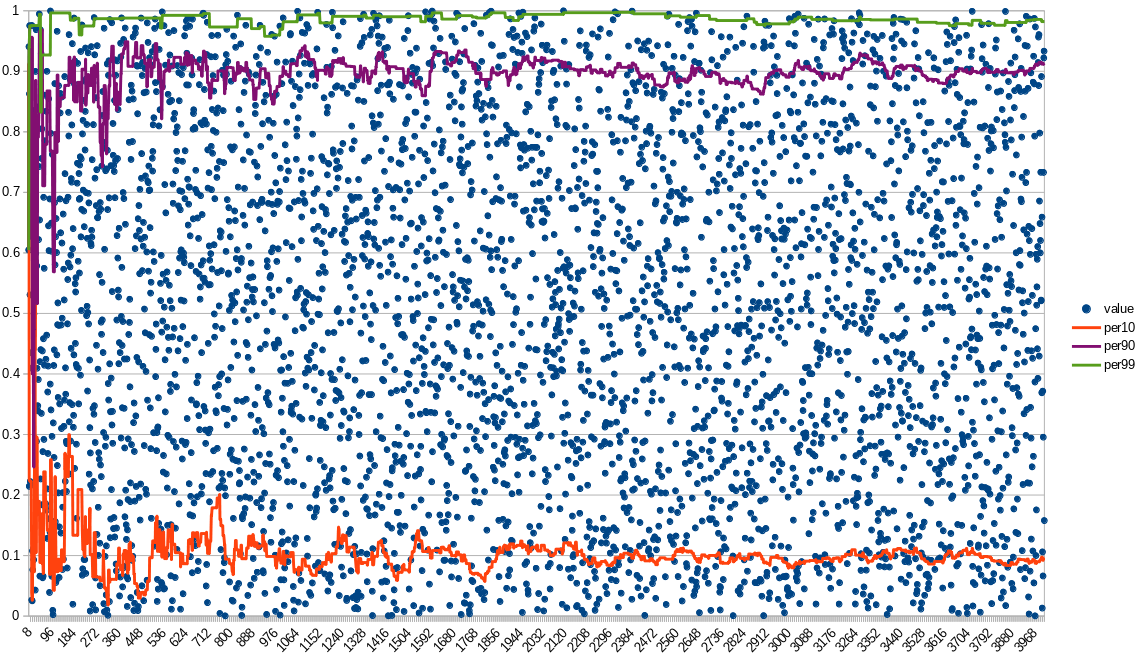

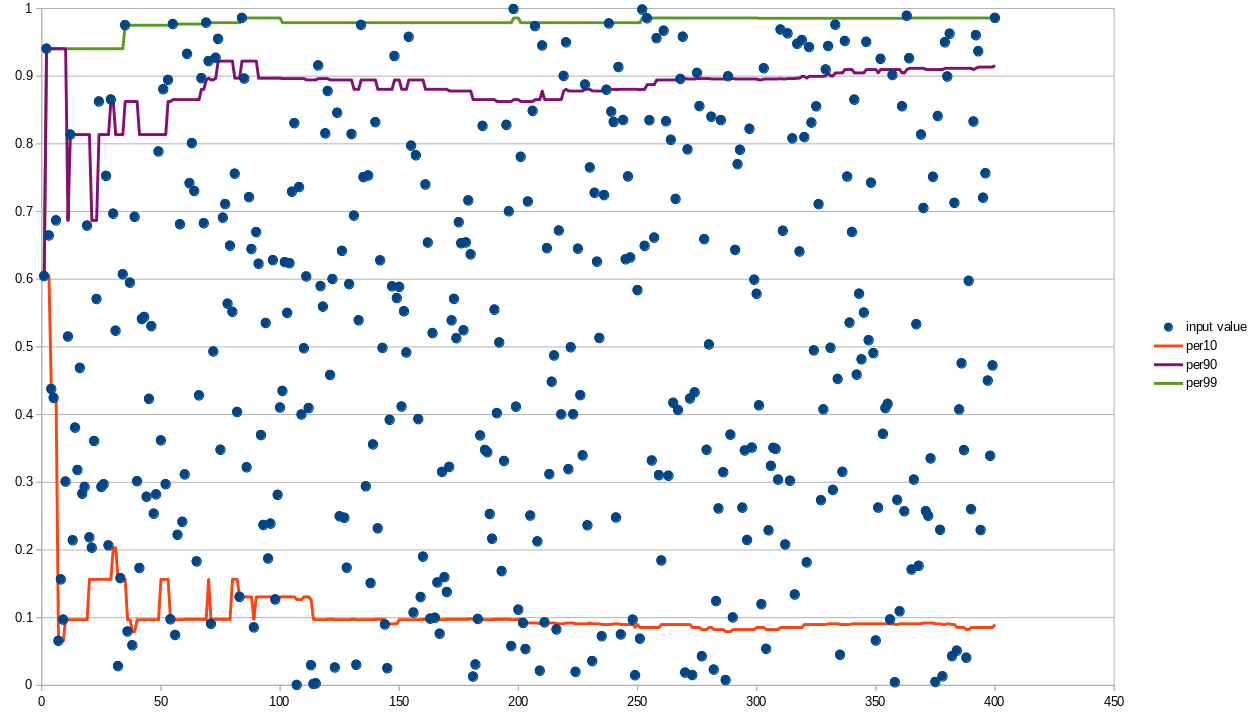

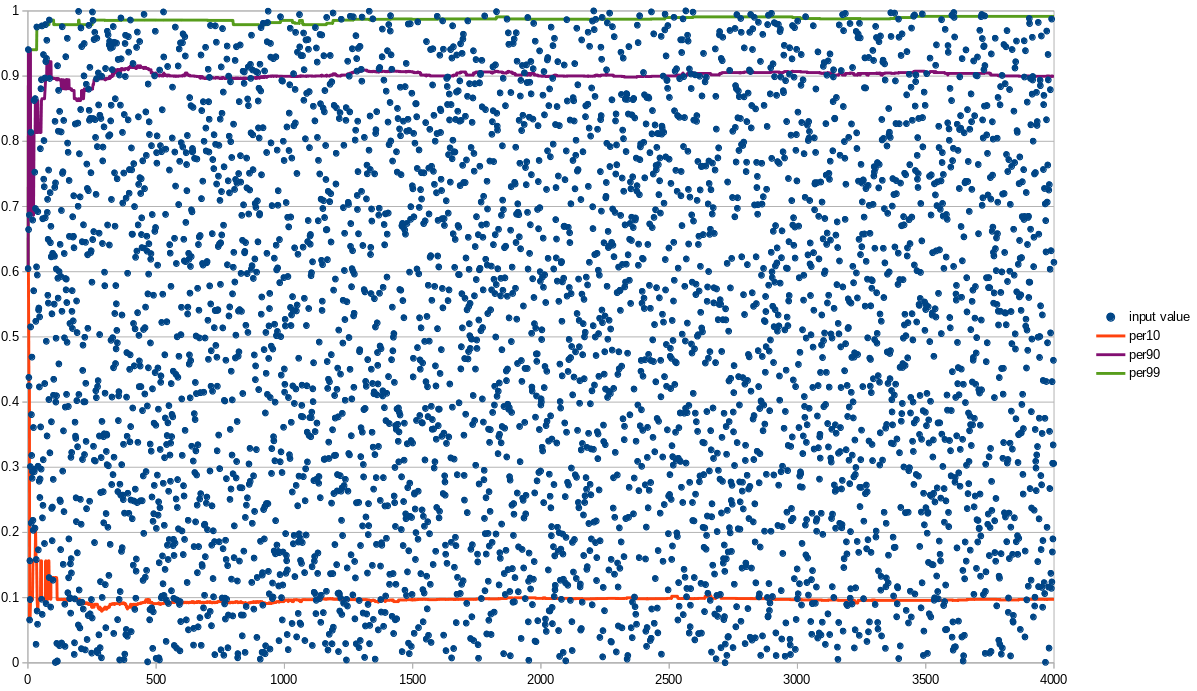

(400 events)

(400 events) (4000 events)

(4000 events) (on this graph the percentile values are absolutely correct, because there's less than 1000 events)

(on this graph the percentile values are absolutely correct, because there's less than 1000 events) (4000 events)

(4000 events)